
Heimdall

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 4:16:58 [ #141 ]

Regular Member

Joined: 20-Jan-2025

Posts: 104

From: North Dakota

@bhabbott

Quote:

Karlos says "fdiv is a pretty lousy argument point because almost anyone writing performant code will be avoiding it as much as humanly possible.". But is that actually happening? I'm no expert on 3D rendering, but I looked at the source code for OpenLara and in the 'transform' routine it had this:-

```c
c.x /= c.w;
c.y /= c.w;
c.z /= c.w;
```

Is this one of those cases where divide can't be avoided, or the compiler converts it to multiply, or is it just crappy code? If I was porting this code I wouldn't change anything that I didn't understand, which would be 99% of it. And even then it probably wouldn't work. |

The 3D transformation stage is a very small portion of the entire rendering pipeline with full-screen texturing (as in Quake or Tomb Raider).

I seriously doubt it's anywhere close to 10% (TR is seriously low-poly), so even if you removed the division by refactoring, you'd see very little performance gain.

Most likely they're computing this normalization (the perspective divide) because the normalized coordinates are used later, either for some feature (you'd have to examine the rest of the code to see whether it uses them directly) or just to stay compliant with the standard approach, simply because on the Pentium it's not a big deal and not worth their time. This also makes it trivial to convert to OpenGL later.

With a little bit of work you can absolutely avoid this normalization division. I have been avoiding it myself on Jaguar and Amiga in my engine, but obviously, with a generic 3D camera like in TR, there are benefits (and arguably requirements) to computing it.

Just look into any tutorial on the projection transformation for an explanation of why this is useful.
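For readers who haven't seen the projection stage spelled out, here is a minimal C sketch of it: an inside test done in clip space before any division, then one reciprocal of w and three multiplies for the perspective divide, then a viewport mapping to pixels. The `ClipVert`/`ScreenVert` types, the OpenGL-style "-w <= x, y, z <= w" convention and the y-down screen mapping are assumptions for illustration, not OpenLara's actual code.

```c
#include <stdbool.h>

/* Hypothetical types for illustration; not taken from OpenLara. */
typedef struct { float x, y, z, w; } ClipVert;      /* clip-space position */
typedef struct { float sx, sy, depth; } ScreenVert; /* pixel position + NDC depth */

/* Returns false if the vertex lies outside the view volume (it would need clipping). */
bool clip_to_screen(const ClipVert *c, int width, int height, ScreenVert *out)
{
    /* Inside test in clip space, before any division:
       a point is inside when w > 0 and -w <= x, y, z <= w. */
    if (c->w <= 0.0f ||
        c->x < -c->w || c->x > c->w ||
        c->y < -c->w || c->y > c->w ||
        c->z < -c->w || c->z > c->w)
        return false;

    /* Perspective divide: one division, then three multiplies. */
    float invW = 1.0f / c->w;
    float ndcX = c->x * invW;
    float ndcY = c->y * invW;
    float ndcZ = c->z * invW;

    /* Viewport transform: NDC [-1, 1] to pixels, with y pointing down. */
    out->sx = (ndcX + 1.0f) * 0.5f * (float)width;
    out->sy = (1.0f - ndcY) * 0.5f * (float)height;
    out->depth = ndcZ;
    return true;
}
```

Doing the inside test before the divide means rejected vertices never pay for the division at all.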

Heimdall

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 4:50:34 [ #142 ]

Regular Member

Joined: 20-Jan-2025

Posts: 104

From: North Dakota

@OneTimer1

Quote:

OneTimer1 wrote:

@Thread

I think some people should have their own forum for discussions, instead of turning a thread about the market for a game, into an endless discussion about CPU architecture. |

Wait, are you seriously complaining about slightly off-topic posts in MY thread?

This is an extremely civilized discussion, I can assure you of that, especially compared to a certain third-world rabid Jaguar subforum on a certain Atari page (recently acquired by Atari themselves) that shall remain unnamed.

Why? Because:

- there's nobody defecating in the thread, which is just unimaginable in itself!!!

- zero animated GIFs

- zero insults (with a "Like" counter next to the response header approaching 50)

- zero admins abusing their privileges, editing posts and later removing certain portions of other posts to completely change the meaning of what you wrote, and then of course disabling your ability to edit the post (this should be illegal, but people are people)

It's probably hard for you to appreciate what you've got on this forum, because it's so quiet and full of decent, smart people who are capable of expressing themselves while staying human and civilized.

I am very grateful for this forum, and I learn something new from every single post by Hammer and Matthey.

matthey

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 5:23:40 [ #143 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2754

From: Kansas

Hammer Quote:

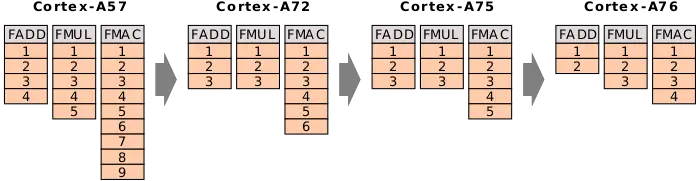

Other instructions have their clock cycle completion times.

68060:
- FPU is not pipelined.
- FMUL has 3 to 5 clock cycles.
- FADD has 3 to 5 clock cycles.

Pentium:
- FPU is pipelined.
- FMUL has 3 cycles.
- FADD has 3 cycles.

|

The pipelined Pentium FPU can only issue an FMUL instruction every other cycle, and FXCH instructions are required to avoid FPU stack register dependencies. Performing FXCH in parallel with other FPU instructions, instead of executing an integer instruction in parallel like the 68060 and Cyrix 6x86, was the only way to make FPU pipelining worthwhile with the horrible x86 FPU ISA.

Are you comparing best-case, common-case or worst-case latencies? The best-case and common-case latency of FMUL and FADD is 3 cycles on both the 68060 and the Pentium. The worst case, which includes a load from memory, is much worse than 5 cycles on both. The 68060's latencies also increase with addressing modes that make the instruction longer than 6 bytes, which is the maximum instruction length the ColdFire supports, including for the ColdFire FPU. The base 68k FPU instructions are mostly 4 bytes, so the addressing modes that keep an instruction at 6 bytes or less are (An), -(An), (An)+ and (d16,An). These are the most common ones, but they are limiting; in particular, no FP immediates are allowed, even though immediates in the more predictable instruction stream are better than constants loaded as data.

The limitation has nothing to do with the 4 B/cycle instruction fetch; it is about how instructions are stored in the instruction buffer, in order to minimize the buffer SRAM, and perhaps about dispatcher logic limitations. The design choice reduces core area and power at the cost of performance. Gunnar suggested that adding 8-byte instruction support to ColdFire was worthwhile, even in an FPGA core with inefficient routing.
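As a rough illustration of the size arithmetic: a general 68k FPU instruction is a 2-byte opword plus a 2-byte command word, followed by any extension words the source effective address needs. The C sketch below tabulates a handful of modes against the 6-byte limit. It is deliberately simplified (only some modes, no indexed or PC-relative forms) and is not a model of the 68060's actual instruction buffer; the enum names are made up.

```c
#include <stdbool.h>

/* A few 68k effective-address modes used as the source operand of a
   general FPU instruction such as FMUL <ea>,FPn (names are illustrative). */
typedef enum {
    EA_AN_IND,      /* (An)               */
    EA_AN_POSTINC,  /* (An)+              */
    EA_AN_PREDEC,   /* -(An)              */
    EA_AN_D16,      /* (d16,An)           */
    EA_ABS_L,       /* (xxx).L            */
    EA_IMM_S,       /* #imm, single (.S)  */
    EA_IMM_D,       /* #imm, double (.D)  */
    EA_IMM_X        /* #imm, extended (.X)*/
} EaMode;

/* Extension bytes that follow the 4-byte opword + command word. */
static int ea_extension_bytes(EaMode m)
{
    switch (m) {
    case EA_AN_IND: case EA_AN_POSTINC: case EA_AN_PREDEC: return 0;
    case EA_AN_D16:                                        return 2;
    case EA_ABS_L:  case EA_IMM_S:                         return 4;
    case EA_IMM_D:                                         return 8;
    case EA_IMM_X:                                         return 12;
    }
    return -1;
}

/* Total instruction length in bytes. */
int fpu_insn_bytes(EaMode m) { return 4 + ea_extension_bytes(m); }

/* True if the instruction fits within a 6-byte limit. */
bool fits_6_bytes(EaMode m) { return fpu_insn_bytes(m) <= 6; }
```

Every immediate form lands at 8 bytes or more, which is why none of them fit.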

https://community.nxp.com/t5/ColdFire-68K-Microcontrollers/Coldfire-compatible-FPGA-core-with-ISA-enhancement-Brainstorming/td-p/238714 Quote:

Hello,

I work as chip developer.

While creating a super scalar Coldfire ISA-C compatible FPGA Core implementation,

I've noticed some possible "Enhancements" of the ISA.

I would like to hear your feedback about their usefulness in your opinion.

Many thanks in advance.

1) Support for BYTE and WORD instructions.

I've noticed that re-adding the support for the Byte and Word modes to the Coldfire comes relatively cheap.

The cost in the FPGA for having "Byte, Word, Longword" for arithmetic and logic instructions like

ADD, SUB, CMP, OR, AND, EOR, ADDI, SUBI, CMPI, ORI, ANDI, EORI - turned out to be negligible.

Both the FPGA size increase as also the impact on the clockrate was insignificant.

2) Support for more/all EA-Modes in all instructions

In the current Coldfire ISA the instruction length is limited to 6 bytes, therefore some instructions have EA mode limitations.

E.g. the EA-Modes available in the immediate instructions are limited.

That instructions can currently only be 2, 4, or 6 bytes long reduces the complexity of the Instruction Fetch Buffer logic.

The complexity of this unit increases the more options the CPU supports - therefore not supporting a range from 2 to over 20 bytes like the 68K does reduces chip complexity.

Nevertheless, in my tests adding support for 8-byte encoding came at relatively low cost.

With support of 8 Byte instruction length, the FPU instruction now can use all normal EA-modes - which makes them a lot more versatile.

MOVE instruction become a lot more versatile and also the Immediate Instruction could also now operate on memory in a lot more flexible ways.

While with 10 Byte instructions length support - there are then no EA mode limitations from the users perspective - in our core it showed that 10 byte support start to impact clockrate - with 10 Byte support enabled we did not reach anymore the 200 MHz clockrate in Cyclone FPGA that the core reached before.

I'm interested in your opinion of the usefulness of having Byte/Word support of Arithmetic and logic operations for the Coldfire.

Do you think that re-adding them would improve code density or the possibility to operate with byte data?

I would also like to know if you think that re-enabling the EA-modes in all instruction would improve the versatility of the Core.

Many thanks in advance.

Gunnar

|

This was the first time Gunnar supported ColdFire ISA-C instructions that he had dropped and then added back. Option #2 would allow the EA addressing modes (xxx).L, #d32.s, (d8,An,Xi*SF) and (bd=0,An,Xi*SF), the first two being common and the last two useful for arrays and large programs. Supporting half-precision FP immediates could have reduced instruction sizes for the 68060 and ColdFire too, and many FP immediates could be compressed into this format using the VASM peephole optimization. Nobody cares about good 68k or ColdFire development anymore, though. The ColdFire suggestion came long after Motorola/Freescale/NXP had become more worried about keeping the 68k and ColdFire scaled below PPC, and this support will never be added to 68k virtual machines, any more than SMP support will. You can only be sabotaged so much before giving up.
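The half-precision idea hinges on whether a given constant is exactly representable in IEEE 754 binary16; with a 2-byte extension, such an immediate would keep a 4-byte FPU instruction at 6 bytes. A minimal C sketch of that test, working on the bits of a double, is below. The 2-byte immediate format itself is hypothetical (no 68k or ColdFire FPU has one), the function name is illustrative, and NaNs are simply rejected.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

/* Returns true if d converts to IEEE 754 binary16 (half precision) with no
   rounding, i.e. it could be stored in a hypothetical 2-byte FP immediate. */
bool fits_in_half(double d)
{
    uint64_t bits;
    memcpy(&bits, &d, sizeof bits);               /* well-defined type pun */

    int      exp  = (int)((bits >> 52) & 0x7FF);  /* biased exponent */
    uint64_t mant = bits & ((UINT64_C(1) << 52) - 1);

    if (exp == 0x7FF) return mant == 0;           /* +-infinity yes, NaN no */
    if (exp == 0)     return mant == 0;           /* +-0 yes; double subnormals are far below half range */

    int e = exp - 1023;                           /* unbiased exponent */
    if (e > 15 || e < -24) return false;          /* outside half's range */

    /* Half keeps 10 fraction bits for normals (e >= -14); below that
       (half subnormals) each step down in e loses one more bit. */
    int dropped = (e >= -14) ? 42 : 28 - e;       /* low double bits that must be zero */
    return (mant & ((UINT64_C(1) << dropped) - 1)) == 0;
}
```

Common constants such as 0.5, 2.0 or 1024.0 pass, while something like 0.1 does not, so the compression would only apply to a subset of immediates.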

Hammer Quote:

Quake FDIV uses FP32. Quake 2's 3DNow path used FP32.

|

The default rounding precision for the Visual C++ 2.0 compiler was double precision.

https://www.phatcode.net/res/224/files/html/ch63/63-02.html#Heading7 Quote:

FDIV is a painfully slow instruction, taking 39 cycles at full precision and 33 cycles at double precision, which is the default precision for Visual C++ 2.0. While FDIV executes, the FPU is occupied, and can’t process subsequent FP instructions until FDIV finishes. However, during the cycles while FDIV is executing (with the exception of the one cycle during which FDIV starts), the integer unit can simultaneously execute instructions other than IMUL. (IMUL uses the FPU, and can only overlap with FDIV for a few cycles.) Since the integer unit can execute two instructions per cycle, this means it’s possible to have three instructions, an FDIV and two integer instructions, executing at the same time. That’s exactly what happens, for example, during the second cycle of this code:

|

Changing the default rounding precision requires an expensive FLDCW instruction with 8 cycles of latency, and Michael Abrash warns about the dangers of leaving single-precision rounding as the default.

https://www.phatcode.net/res/224/files/html/ch63/63-04.html#Heading10 Quote:

Projection

The final optimization we’ll look at is projection to screenspace. Projection itself is basically nothing more than a divide (to get 1/z), followed by two multiplies (to get x/z and y/z), so there wouldn’t seem to be much in the way of FP optimization possibilities there. However, remember that although FDIV has a latency of up to 39 cycles, it can overlap with integer instructions for all but one of those cycles. That means that if we can find enough independent integer work to do before we need the 1/z result, we can effectively reduce the cost of the FDIV to one cycle. Projection by itself doesn’t offer much with which to overlap, but other work such as clamping, window-relative adjustments, or 2-D clipping could be interleaved with the FDIV for the next point.

Another dramatic speed-up is possible by setting the precision of the FPU down to single precision via FLDCW, thereby cutting the time FDIV takes to a mere 19 cycles. I don’t have the space to discuss reduced precision in detail in this book, but be aware that along with potentially greater performance, it carries certain risks, as well. The reduced precision, which affects FADD, FSUB, FMUL, FDIV, and FSQRT, can cause subtle differences from the results you’d get using compiler defaults. If you use reduced precision, you should be on the alert for precision-related problems, such as clipped values that vary more than you’d expect from the precise clip point, or the need for using larger epsilons in comparisons for point-on-plane tests.

Rounding Control

Another useful area that I can note only in passing here is that of leaving the FPU in a particular rounding mode while performing bulk operations of some sort. For example, conversion to int via the FIST instruction requires that the FPU be in chop mode. Unfortunately, the FLDCW instruction must be used to get the FPU into and out of chop mode, and each FLDCW takes 7 cycles, meaning that compilers often take at least 14 cycles for each float->int conversion. In assembly, you can just set the rounding state (or, likewise, the precision, for faster FDIVs) once at the start of the loop, and save all those FLDCW cycles each time through the loop. This is even more true for ceil(), which many compilers implement as horrendously inefficient subroutines, even though there are rounding modes for both ceil() and floor(). Again, though, be aware that results of FP calculations will be subtly different from compiler default behavior while chop, ceil, or floor mode is in effect.

A final note: There are some speed-ups to be had by manipulating FP variables with integer instructions. Check out Chris Hecker’s column in the February/March 1996 issue of Game Developer for details.

|

You know Quake used single precision FDIV though?

The 68k FPU has offered per-instruction rounding precision, without changing the default rounding mode, since the 68881's FSGLDIV (single-precision divide), and the 68040 introduced the FSop and FDop instructions, which also specify the rounding precision in the instruction. The 68060 FPU also adds back the FINTRZ instruction, which performs the chop (round-to-zero) rounding that compiled C uses by default and which was incompetently left out of the 68040 FPU. I suggested a minor logic change so the other three rounding modes could be supported as FINTRN (round to nearest) like C round(), FINTRM (round toward minus infinity) like C floor() and FINTRP (round toward plus infinity) like C ceil(). Michael Abrash used the less common rounding modes in Quake, but Gunnar knew they were not used enough to add to the 68k FPU ISA, being even more of an FPU expert than you. I know I'm a nobody who knows nothing about anything, as I was just a small part of improving VBCC to have over 3 times the FP performance of GCC 3.3 on the 68060.
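To illustrate why chop is the mode compiled C leans on: C's double-to-int conversion always rounds toward zero, and floor- and ceil-style conversions can be derived from that single mode with a compare and a one-step correction, instead of switching the FPU rounding mode (the FLDCW cost Abrash describes). A minimal sketch, assuming inputs within int range; the function names are illustrative. As a side note, C's round() breaks ties away from zero, whereas IEEE round-to-nearest breaks ties to even (as rint() does in the default mode).

```c
/* C's float-to-int conversion always chops (rounds toward zero), which is
   the behaviour FINTRZ provides, or x87 FIST in chop mode.
   All functions assume x is within int range. */
int chop_to_int(double x) { return (int)x; }

int floor_to_int(double x)
{
    int t = (int)x;                        /* chop */
    return (x < (double)t) ? t - 1 : t;    /* negative non-integers were chopped up: step down */
}

int ceil_to_int(double x)
{
    int t = (int)x;                        /* chop */
    return (x > (double)t) ? t + 1 : t;    /* positive non-integers were chopped down: step up */
}
```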

Hammer Quote:

FALSE, Pentium's zero cycle FXCH effectively makes X87 registers into 8 x87 registers.

|

A zero-cycle FXCH is not free. Not only can the Pentium not execute an integer instruction in parallel with it, as the 68060 can, but code density is also reduced.

Karlos Quote:

Try it. Assign 1.0/c.w to a temporary and then multiply each component by it. I would expect a good compiler to spot this but it may not optimise it because there are potential side effects to care about for strictness where c.w is very large. For most practical transformations, that wouldn't be the case.

This just looks like code written for clarity, rather than performance and the assumption the compiler will just magically fix it.

|

The invert-and-multiply optimization you suggest a good compiler should make is likely not allowed by default. The problem is that precision may be lost, and results can differ on some hardware when switching from divide to multiply, while some backends and their support code, like those for the 68k, are barely maintained in GCC at all. GCC does have an option for this optimization, though.

https://gcc.gnu.org/onlinedocs/gcc/Optimize-Options.html Quote:

-freciprocal-math

Allow the reciprocal of a value to be used instead of dividing by the value if this enables optimizations. For example x / y can be replaced with x * (1/y), which is useful if (1/y) is subject to common subexpression elimination. Note that this loses precision and increases the number of flops operating on the value.

The default is -fno-reciprocal-math.

|

The extended-precision 68k FPU could keep results very close to, if not exactly at, the double precision most software expects, although in my experience GCC prefers to discard the extra intermediate precision, either with FPU instructions like FSop and FDop or by changing the default rounding precision to double. I would not be surprised if this optimization option does not work at all for 68k targets, given GCC's poor support for the 68k, especially for the 68k FPU.
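A small C sketch of the precision trade-off the GCC manual describes: it counts how often x * (1/y) differs from x / y over a range of integer numerators. It assumes IEEE 754 doubles evaluated in double precision (FLT_EVAL_METHOD 0, as on x86-64 with SSE2, not the 68k FPU's extended registers) and a build without -ffast-math or -freciprocal-math. With a divisor of 3 some results differ (x = 5 is one such case); with a power of two such as 8 none do, because 1/8 is exact.

```c
/* Counts numerators x = 1..n for which x * (1/y) != x / y. */
int count_reciprocal_mismatches(double y, int n)
{
    const double inv = 1.0 / y;     /* the single hoisted division */
    int mismatches = 0;
    for (int i = 1; i <= n; i++) {
        double x = (double)i;
        if (x * inv != x / y)       /* compare against a true division */
            mismatches++;
    }
    return mismatches;
}
```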

Precision is not always lost when using the invert-and-multiply trick with an immediate denominator/divisor. The VASM invert-and-multiply peephole optimization is only used when the result and FPCC are exactly the same as with the FDIV, which is not uncommon. What Frank calls power-of-two FP numbers not only allow the invert-and-multiply optimization but commonly also allow compression to a smaller FP data type, which is why the optimization performs both the invert-and-multiply trick and the compression of FP immediates. I explained both potential optimizations to Frank, and he saw the similarities and figured out how to combine them. It was one of those experiments that works out better than expected. To give an idea of how successful the compression can be, I compiled VBCC with VBCC, and every single FP immediate was compressed to single precision despite double precision being the default minimum in C. I did not check for instances of the invert-and-multiply peephole optimization.

Like I said earlier, it would be possible to add a compiler option like -freciprocal-math or -ffast-math as the more general way to turn on such optimizations, which could be passed down to VASM to perform the optimization where precision is lost. The optimization would be limited to immediates, while the VBCC compiler itself would have to get much more sophisticated to perform the invert-and-multiply trick without immediates. VBCC does not even perform the invert-and-multiply trick for integers, even though I wrote very flexible DivMagic code, optimized for C, that I hoped would be included.
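The two conditions described here can be sketched in C. The first check accepts a divisor only when its reciprocal is exactly representable (which, for a finite nonzero double, means a power of two whose reciprocal does not overflow); in that case x * (1/c) and x / c round identically for every x. The second tests whether a double constant survives a round trip through single precision. This illustrates the idea under IEEE 754 assumptions; it is not VASM's actual peephole code, and the function names are made up.

```c
#include <stdbool.h>
#include <stdint.h>
#include <string.h>
#include <float.h>

/* True if c is +-2^k (normal or subnormal). */
static bool is_power_of_two(double c)
{
    uint64_t bits;
    memcpy(&bits, &c, sizeof bits);
    uint64_t exp  = (bits >> 52) & 0x7FF;
    uint64_t mant = bits & ((UINT64_C(1) << 52) - 1);
    if (exp == 0x7FF) return false;                                  /* inf or NaN */
    if (exp == 0)     return mant != 0 && (mant & (mant - 1)) == 0;  /* subnormal: one bit set */
    return mant == 0;                                                /* normal: 1.0 * 2^e */
}

/* True if 1/c is exact, so x / c may be replaced by x * (1/c)
   with bit-identical results for every x. */
bool reciprocal_is_exact(double c)
{
    if (!is_power_of_two(c)) return false;
    double r = 1.0 / c;
    return r >= -DBL_MAX && r <= DBL_MAX;   /* reject overflow to infinity */
}

/* True if a double constant can be stored as single precision without
   changing its value (the compression case). NaNs are rejected. */
bool fits_in_single(double d)
{
    if (d != d) return false;                       /* NaN */
    if (d > FLT_MAX || d < -FLT_MAX)                /* outside float range: */
        return d > DBL_MAX || d < -DBL_MAX;         /* only +-infinity survives */
    return (double)(float)d == d;
}
```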

https://eab.abime.net/showthread.php?t=82710&highlight=DivMagic

After Gunnar lied to his goon squad cult causing them to attack me on EAB resulting in me being permanently banned, I retired from Amiga development. There is no need for development here with emulation. VBCC and VASM should just remove all the optimizations to increase compile speed since the optimizations are worthless now with emulation. A 5GHz CPU core has 100 cycles for every 1 cycle on the 68060@50MHz so a real division will be faster than any 68060 division optimization. Optimizing for museum pieces is a waste of time.

Karlos Quote:

In this example, you can trade 3 divisions for 1 division and 3 multiplications. Division is typically an order of magnitude slower than multiplication on most FPU. Some FPU might even have a reciprocal operation (1/x) that's quicker than a regular general purpose division (not seen an example, but it's the sort of thing that could conceivably exist).

|

Some reciprocal instructions only give a low-precision reciprocal estimate, like the POWER/PPC FRES instruction.

https://www.ibm.com/docs/en/aix/7.2?topic=set-fres-floating-reciprocal-estimate-single-instruction

It is barely usable by a compiler as is. A double-precision version would have been more useful, but it does not save much over loading 1 into an FPU register and dividing.
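To show why a low-precision estimate is barely usable on its own yet still a useful starting point: Newton-Raphson iteration r' = r(2 - dr) roughly squares the relative error at each step, using only multiplies and subtracts, and converges from any estimate whose relative error is below 1. In this C sketch the initial estimate comes from an integer bit trick standing in for FRES; that substitution and its magic constant are assumptions chosen for illustration and do not reproduce FRES's actual accuracy. The function names are hypothetical.

```c
#include <stdint.h>
#include <string.h>

/* Crude reciprocal estimate by integer subtraction on the float's bits,
   standing in for a hardware estimate such as FRES (illustrative only). */
static float recip_estimate(float d)
{
    uint32_t i;
    float r;
    memcpy(&i, &d, sizeof i);
    i = 0x7EF311C3u - i;
    memcpy(&r, &i, sizeof r);
    return r;
}

/* Refines the estimate with `steps` Newton-Raphson iterations.
   Assumes d is a positive normal float. */
float recip_newton(float d, int steps)
{
    float r = recip_estimate(d);
    for (int k = 0; k < steps; k++)
        r = r * (2.0f - d * r);   /* error e becomes roughly e*e */
    return r;
}
```

The raw estimate is only good to a few percent, but after a few iterations the result is close to full single precision.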

Karlos

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 5:35:25 [ #144 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4960

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

@Hammer

Why don't you read the actual source code: https://github.com/id-Software/Quake/blob/master/WinQuake/d_draw16.s

Perspective correction is performed every 16th pixel with linear interpolation to *avoid* having to call fdiv too often. Moreover, the one fdiv call is set amongst code that can execute around it, hiding the latency it adds. More importantly, look at how many times values are multiplied by values based on the reciprocals calculated by division (illustrating my earlier point about reciprocal multiplication).

Ideally this is a case where you'd want to perform the calculation for every pixel for the best visual quality, which would require a division per pixel.

This entire source exists and was optimised accordingly because that's just too expensive. And it's too expensive because it depends on floating point division.
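To make the technique concrete, here is a minimal C sketch of subdivided perspective-correct texture stepping. u/z, v/z and 1/z are stepped linearly across the span, a real division produces exact u and v every SUBDIV pixels, and u and v are linearly interpolated in between. It is a simplified illustration, not a transcription of d_draw16.s; the struct layout, the SUBDIV value and the small 1/n table used for the final short run are assumptions.

```c
/* Hypothetical per-span setup: u/z, v/z and 1/z vary linearly in screen x,
   so they can be stepped with additions; u and v themselves cannot. */
typedef struct {
    float uoz, voz, ooz;     /* u/z, v/z, 1/z at the first pixel of the span */
    float duoz, dvoz, dooz;  /* their change per pixel */
} SpanGradients;

#define SUBDIV 16            /* exact perspective every 16 pixels */

static float inv_run[SUBDIV + 1];   /* 1/n for n = 1..SUBDIV, built once */

void init_span_tables(void)
{
    for (int n = 1; n <= SUBDIV; n++)
        inv_run[n] = 1.0f / (float)n;
}

/* Writes u and v for `count` pixels (exact at the start of every run,
   linearly interpolated inside it) and returns the number of 1/z divisions. */
int draw_span(const SpanGradients *g, int count, float *u_out, float *v_out)
{
    int divisions = 0;
    float uoz = g->uoz, voz = g->voz, ooz = g->ooz;

    float z = 1.0f / ooz;  divisions++;
    float u0 = uoz * z, v0 = voz * z;

    for (int x = 0; x < count; ) {
        int run = (count - x < SUBDIV) ? count - x : SUBDIV;

        /* Step the linear quantities to the end of this run and take one
           real division there to get exact u and v. */
        uoz += g->duoz * (float)run;
        voz += g->dvoz * (float)run;
        ooz += g->dooz * (float)run;
        z = 1.0f / ooz;  divisions++;
        float u1 = uoz * z, v1 = voz * z;

        /* Affine interpolation in between: no per-pixel division. */
        float du = (u1 - u0) * inv_run[run];
        float dv = (v1 - v0) * inv_run[run];
        for (int i = 0; i < run; i++) {
            u_out[x + i] = u0 + du * (float)i;
            v_out[x + i] = v0 + dv * (float)i;
        }

        u0 = u1;  v0 = v1;
        x += run;
    }
    return divisions;
}
```

A 40-pixel span costs 4 divisions instead of 40. The visible price is a small error between the exact points, which grows with SUBDIV.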

_________________

Doing stupid things for fun...

Hammer

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 9:17:31 [ #145 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6505

From: Australia

@Karlos

https://www.gamers.org/dEngine/quake/papers/checker_texmap.html

Michael Abrash stated that screen gradients for texture coordinates and 1/z are used to calculate perspective-correct texture coordinates every 8 or 16 pixels, and that linear interpolation is used between those points.

My position doesn't change, since FDIV is still used at a fixed interval, after a certain number of pixels have been processed. Faking it via interpolation between FDIV uses is part of the visual trickery.

Last edited by Hammer on 06-Mar-2025 at 09:22 AM.

_________________

Amiga 1200 (rev 1D1, KS 3.2, PiStorm32/RPi CM4/Emu68)

Amiga 500 (rev 6A, ECS, KS 3.2, PiStorm/RPi 4B/Emu68)

Ryzen 9 7950X, DDR5-6000 64 GB RAM, GeForce RTX 4080 16 GB

Karlos

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 9:39:17 [ #146 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4960

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

@bhabbott

Quote:

Karlos says "fdiv is a pretty lousy argument point because almost anyone writing performant code will be avoiding it as much as humanly possible.". But is that actually happening? I'm no expert on 3D rendering, but I looked at the source code for OpenLara and in the 'transform' routine it had this:-

```c
c.x /= c.w;
c.y /= c.w;
c.z /= c.w;
```

Is this one of those cases where divide can't be avoided, or the compiler converts it to multiply, or is it just crappy code? If I was porting this code I wouldn't change anything that I didn't understand, which would be 99% of it. And even then it probably wouldn't work. I guess we need a coding expert like Karlos to do the job. |

So, I did a thing:

```c
typedef struct {
    float x, y, z, w;
} Vec4f;

void divideByW(Vec4f* v) {
    v->x /= v->w;
    v->y /= v->w;
    v->z /= v->w;
}

void multiplyByInverseW(Vec4f* v) {
    float invW = 1.0f/v->w;
    v->x *= invW;
    v->y *= invW;
    v->z *= invW;
}
```

In a strictly mathematical sense, both functions are equivalent. However, from a strictly IEEE-754 behavioural perspective, there will be differences in edge cases where w is very large or very small.

Compiled this as:

```
m68k-amigaos-gcc -fomit-frame-pointer -O3 -m68060 -mtune=68060 fdiv.c -S -o fdiv.s
```

Gives:

```
#NO_APP
    .text
    .align 2
    .globl _divideByW
_divideByW:
    move.l (4,sp),a0
    move.l (12,a0),d0
    fsmove.s (a0),fp0
    fsdiv.s d0,fp0
    fmove.s fp0,(a0)+
    fsmove.s (a0),fp0
    fsdiv.s d0,fp0
    fmove.s fp0,(a0)+
    fsmove.s (a0),fp0
    fsdiv.s d0,fp0
    fmove.s fp0,(a0)
    rts
    .align 2
    .globl _multiplyByInverseW
_multiplyByInverseW:
    fmove.w #1,fp0
    move.l (4,sp),a0
    fsdiv.s (12,a0),fp0
    fsmove.s (a0),fp1
    fsmul.x fp0,fp1
    fmove.s fp1,(a0)+
    fsmove.s (a0),fp1
    fsmul.x fp0,fp1
    fmove.s fp1,(a0)+
    fsmul.s (a0),fp0
    fmove.s fp0,(a0)
    rts
```

In practise, for all sensible member values of the tuple, the two functions are equivalent but there's no way for the compiler to know that you aren't going to be dealing with things like denormalised values or other edge cases where the reciprocal could lose precision over direct division. Consequently it plays it safe, even at -O3.

I haven't benchmarked this, but I would feel comfortable predicting that the latter is faster and numerically congruent for any values that aren't already floating-point edge cases.
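For the numerical half of that prediction (not the speed half), a minimal C harness can run both functions over a range of inputs, count the components that differ and record the largest difference in ulps. It assumes IEEE 754 single precision evaluated in float (FLT_EVAL_METHOD 0, e.g. x86-64 with SSE, not the 68k FPU's extended registers), so results from real 68060 code could differ. The input pattern and the helper names are arbitrary. With w a power of two the two variants agree exactly; with w = 3 some results differ (x = 5 is one case).

```c
#include <stdint.h>
#include <string.h>

typedef struct { float x, y, z, w; } Vec4f;

/* The two variants from the post above. */
void divideByW(Vec4f* v)          { v->x /= v->w; v->y /= v->w; v->z /= v->w; }
void multiplyByInverseW(Vec4f* v) { float invW = 1.0f / v->w; v->x *= invW; v->y *= invW; v->z *= invW; }

/* Distance in ulps between two finite floats of the same sign. */
static uint32_t ulp_distance(float a, float b)
{
    uint32_t ia, ib;
    memcpy(&ia, &a, sizeof ia);
    memcpy(&ib, &b, sizeof ib);
    return ia > ib ? ia - ib : ib - ia;
}

/* Runs both variants for x = 1..n (y and z derived from x) with the given
   positive w, and reports mismatching components and the largest ulp gap. */
void compare_variants(float w, int n, int *mismatches, uint32_t *max_ulps)
{
    *mismatches = 0;
    *max_ulps = 0;
    for (int i = 1; i <= n; i++) {
        Vec4f a = { (float)i, 0.5f * (float)i, 2.0f, w };
        Vec4f b = a;
        divideByW(&a);
        multiplyByInverseW(&b);
        const float ra[3] = { a.x, a.y, a.z }, rb[3] = { b.x, b.y, b.z };
        for (int k = 0; k < 3; k++) {
            uint32_t d = ulp_distance(ra[k], rb[k]);
            if (d != 0) (*mismatches)++;
            if (d > *max_ulps) *max_ulps = d;
        }
    }
}
```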

Hammer

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 10:30:09 [ #147 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6505

From: Australia

@matthey

Quote:

The pipelined Pentium FPU can only issue a FMUL instruction every other cycle and FXCH instructions are required to avoid FPU stack register dependencies. Performing FXCH in parallel with other FPU instructions instead of executing an integer instruction in parallel like the 68060 and Cyrix 6x86 was the only way to make FPU pipelining worthwhile with the horrible x86 FPU ISA.

(SNIP)

|

Your narrative can't overcome Quake benchmark results.

The x86 FPU ISA is so horrible that AMD made the K7 Athlon into an FPU beast.


The Pentium FPU pipeline supports FADD, FMUL and FSUB.

The Pentium FPU's port feeds three FPU sub-units: ADD, MUL and DIV.

Each sub-unit has its own latencies. FDIV blocks new FP instructions, not other in-flight FP instructions.

FXCH is implemented as a register rename, not a move, hence it takes zero cycles, but it consumes an instruction issue slot. The Pentium doesn't have a major problem with fetching from the L1 instruction cache; it has a prefetch buffer before the dual instruction decoders.

Other FPUs from the RISC world have more than 8 FPRs, which pushed x86 toward register renaming schemes, such as in the Intel Pentium Pro.

Quote:

Are you comparing best case, common case or worst case latencies? The best case and common case latency for both the 68060 and Pentium FMUL and FADD is 3 cycles.

The worst case latency is much worse than 5 cycles for both which includes a load from memory. The 68060 latencies increase with some addressing modes that make the instruction larger than 6 bytes which is all that the ColdFire supports including the ColdFire FPU.

|

Your narrative can't overcome Quake benchmark results.

The 68060's superscalar design wasn't robust enough for the TheForceEngine port.

Quote:

The 68k base FPU instructions are mostly 4 bytes so the addressing modes that keep the instruction 6 bytes or less are (An), -(An), (An)+ and (d16,An) which are the most common ones but limiting, especially no fp immediates are allowed which are better in the more predictable code than loading as data. The limitation has nothing to do with the 4B/cycle instruction fetch but about how instructions are stored in the instruction buffer in order to minimize the buffer SRAM and perhaps dispatcher logic limitations. The design choice reduces core area and power at the cost of performance. Gunnar suggested adding 8 byte instruction support to ColdFire was worthwhile even in a FPGA core with inefficient routing.

|

When running AmiQuake, the AC68080 only uses the dual ALU/AGU and an FPU.

Both the Vampire V2 and the Warp1260 have RTG with semi-modern memory modules.

Unlike the 68060's, the AC68080's FPU is not attached to the integer pipeline, due to its quad instruction issue front end.

The AC68080's AmiQuake is faster.

Quote:

This was the first time Gunnar supported the ColdFire ISA-C instructions that he dropped and then added back.

(SNIP)

|

Gunnar can do as he pleases. Gunnar can flip-flop with his projects as he chases new customers. Money doesn't grow on trees.

Quote:

The default rounding precision for the Visual C++ 2.0 compiler was double precision.

|

https://www.quakewiki.net/quakesrc/114.html

To compile the quake source code for Win95+ you should have Microsoft Visual C++ v6.0. v5.0 might work too but I can't guarantee it.

For Visual C++, float is FP32 and double is FP64.

I'd rather use the quickest instruction for the job and see how much I can get away with in "undetectable" visual cheats.

For Quake III, https://breq.dev/2021/03/17/5F3759DF

It's at most 32 bits, i.e. long (INT32) and float (FP32).
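For reference, here is a sketch in the spirit of the routine that article discusses: an initial 1/sqrt(x) estimate from the 0x5F3759DF bit trick using only 32-bit integer and float types, followed by one Newton-Raphson step. It is a restatement, not a copy of id's source; it uses memcpy rather than pointer casting to stay within C's aliasing rules, and the function name is illustrative.

```c
#include <stdint.h>
#include <string.h>

/* Approximates 1/sqrt(x) for positive normal x: bit-trick estimate,
   then one Newton-Raphson refinement. */
float fast_rsqrt(float x)
{
    uint32_t i;
    float y;
    memcpy(&i, &x, sizeof i);
    i = 0x5F3759DFu - (i >> 1);          /* initial estimate from the bits */
    memcpy(&y, &i, sizeof y);
    y = y * (1.5f - 0.5f * x * y * y);   /* one Newton-Raphson step */
    return y;
}
```

Like Quake's span code, it trades exactness for avoiding both a square root and a division.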

Quote:

After Gunnar lied to his goon squad cult causing them to attack me on EAB resulting in me being permanently banned, I retired from Amiga development. There is no need for development here with emulation. VBCC and VASM should just remove all the optimizations to increase compile speed since the optimizations are worthless now with emulation. A 5GHz CPU core has 100 cycles for every 1 cycle on the 68060@50MHz so a real division will be faster than any 68060 division optimization. Optimizing for museum pieces is a waste of time.

|

The 68060 doesn't have a loving parent, while MIPS64 has MIPS Inc. continuing to upgrade it. It's difficult for the 68K microarchitecture to evolve without a proper corporate backer. When I looked at a modernized multi-core MIPS64 R6 SoC with deep learning extensions, I saw what the 68K could have been in an alternate timeline.

Note that 68060 evolutions like the AC68080 may render certain software optimizations for the 68060 redundant. The AC68080's SysInfo benchmark shows a properly superscalar 68k with two integer pipelines; the 68060's two 2-byte optimizations are not required for the AC68080.

NXP/Freescale's PowerPC e200's compressed PPC ISA effectively killed 68K/ColdFire.

Freescale didn't split 68K/ColdFire off into a separate corporate entity focused on 68K. Freescale was a PPC vampire that sucked revenue from the 68K's embedded market until customers switched to another CPU family.

At least IBM is loyal to PPC.

Last edited by Hammer on 06-Mar-2025 at 01:08 PM.



Karlos

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 10:51:56 [ #148 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4960

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

@Hammer

It's used because the latency of it can be hidden by integer operations that come straight afterwards. And even then it's used as minimally as possible. You can see it in the actual code.

Abrash was brought in to optimise code that Carmack had written in C, but I don't know that he had the remit to change the algorithm approach. The calculations were based on division, so he optimised it the best way he could within his remit which was to *reduce the amount* of division as far as possible and then hide the cost of the remaining division by carefully arranging the instruction sequence. You can read it right there in the source code.

We have a similar problem in AB3D2, albeit in fixed point, applied primarily to floors and vector models. As with Quake, the correction involves dividing by various numbers, and typically not by the same number more than once in succession. However, we have the benefit of not being constrained, so we changed it to use a reciprocal lookup that spans the majority percentile of expected divisor inputs. Consequently we were able to use a lookup table of 16384/N, which suited our use case, and remove many divs.l operations, replacing them with a lookup/multiply/shift-right approach. In some cases we have to clamp inputs or fall back to division for the smaller range.

These weren't marginal gains. The performance was uplifted significantly on 030, 040 and 060, but especially on 060 since the multiplication step is so much faster than the division it replaced.
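The lookup/multiply/shift idea can be sketched in C as below. It is not AB3D2's code (which is 68k assembly working in fixed point); the table range, the unsigned operands and the function names are assumptions for illustration. The table holds floor(16384/N), so x/N is approximated by (x * table[N]) >> 14, with a fallback to a real division for divisors outside the table.

```c
#include <stdint.h>

/* Hypothetical sizes; the post only says the table holds 16384/N
   over the common range of divisors. */
#define RECIP_SHIFT 14     /* 16384 == 1 << 14 */
#define RECIP_MAX   1024   /* divisors 1..RECIP_MAX use the table */

static uint32_t recip_table[RECIP_MAX + 1];

void init_recip_table(void)
{
    recip_table[0] = 0;                            /* unused: n must be > 0 */
    for (uint32_t n = 1; n <= RECIP_MAX; n++)
        recip_table[n] = (1u << RECIP_SHIFT) / n;  /* floor(16384 / n), computed once */
}

/* Approximates x / n (n > 0) with a lookup, a multiply and a right shift.
   Because table entries are truncated, for x below 16384 the result is
   either x / n or one less; the error grows for larger x. */
uint32_t recip_div(uint32_t x, uint32_t n)
{
    if (n > RECIP_MAX)
        return x / n;                              /* fallback: real division */
    return (uint32_t)(((uint64_t)x * recip_table[n]) >> RECIP_SHIFT);
}
```

The truncated table is the price of removing the divide. Clamping inputs keeps that error within what the renderer can tolerate.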

Division is poison. You avoid it or minimise it at all costs in performance-critical code.

Last edited by Karlos on 06-Mar-2025 at 10:53 AM.

Hammer

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 6-Mar-2025 11:50:57 [ #149 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6505

From: Australia

@Karlos

Quote:

Abrash was brought in to optimise code that Carmack had written in C, but I don't know that he had the remit to change the algorithm approach. The calculations were based on division, so he optimised it the best way he could within his remit which was to *reduce the amount* of division as far as possible and then hide the cost of the remaining division by carefully arranging the instruction sequence. You can read it right there in the source code.

|

In the end, it's smoke and mirrors.

A slower instruction is only used when you have to use it.

In pure C++, a programmer can create a code structure with minimal FDIV usage.

Quote:

We have a similar problem in AB3D2, albeit fixed point, applied to floors and vector models primarily. As with quake. the correction involves dividing by various numbers and typically not the same number more than once in succession.

|

It's unwise to use the slowish instruction in succession. Quake's FDIV usage only has a resolution of 8 or 16 pixels, with an approximation between two FDIV intervals.

Quote:

However, we have the benefit of not being constrained so we changed it to use a reciprocal lookup that spans the majority percentile of expected divisor inputs. Consequently we were able to use a lookup table of 16384/N which suited our use case and remove many divs.l operations and replace them with a lookup/multiply/shift right approach. In some cases we have to clamp inputs or fall back to division for the smaller range.

These weren't marginal gains. The performance was uplifted significantly on 030, 040 and 060, but especially on 060 since the multiplication step is so much faster than the division it replaced.

Division is poison. You avoid it or minimise it at all costs in performance critical code.

|

PocketQuake is designed for FPU-less, ARM-based Windows CE devices.

https://www.hpcfactor.com/scl/1057/PocketMatrix/Pocket_Quake/version_0.62?page=download

PocketQuake source code download

WinQuake may not be a good basis for the Amiga's Quake, although the WinQuake port for the Amiga runs fine on the AC68080.

PocketQuake's closest relative would be the PS1's fixed-point Quake. Such a major change in datatype rules it out as a Quake benchmark.

Optimized Quake would still have a memory bandwidth bottleneck with a 32-bit bus.

With 32-bit 100 MHz SDRAM, a 68060 @ 100 MHz has memory bandwidth equivalent to a Pentium 75's 50 MHz 64-bit bus. 32-bit 100 MHz SDRAM for the Amiga didn't exist in 1995 to 1996.
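The equivalence is simple peak-bandwidth arithmetic: bus width in bytes times bus clock. These are theoretical peaks that ignore latency, wait states and refresh, so real throughput on either machine would be lower.

$$
B_{\text{peak}} = \frac{w_{\text{bus}}}{8}\, f_{\text{bus}}:\qquad
\underbrace{\frac{32}{8}\,\text{B} \times 100\,\text{MHz}}_{\text{68060, 32-bit SDRAM}} = 400\,\text{MB/s} = \underbrace{\frac{64}{8}\,\text{B} \times 50\,\text{MHz}}_{\text{Pentium 75, 64-bit bus}}
$$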

Last edited by Hammer on 06-Mar-2025 at 11:54 AM, 12:11 PM, 12:16 PM and 01:10 PM.

_________________

Amiga 1200 (rev 1D1, KS 3.2, PiStorm32/RPi CM4/Emu68)

Amiga 500 (rev 6A, ECS, KS 3.2, PiStorm/RPi 4B/Emu68)

Ryzen 9 7950X, DDR5-6000 64 GB RAM, GeForce RTX 4080 16 GB

bhabbott

Posted on 7-Mar-2025 4:33:58

[ #150 ]

Cult Member

Joined: 6-Jun-2018

Posts: 554

From: Aotearoa

@Hammer

Quote:

Hammer wrote:

32bit 100MHz SDRAM in 1995 to 1996 for the Amiga doesn't exist.

|

True. Google's lying AI says that the first Pentium motherboard supporting SDRAM was the VIA Apollo Pro, introduced in May 1998.

But the Cyberstorm 060 had interleaved 64-bit RAM.

Quote:

| WinQuake may not be a good source for Amiga's Quake while the WinQuake port for the Amiga is fine on AC68080. |

That's nice.

But Quake is an old game. This thread is about new games. I reckon a 50MHz 060 could do justice to a wide variety of new games on the Amiga. Just don't try to push it too hard and it will be fine.

cdimauro

Posted on 7-Mar-2025 13:07:20

[ #151 ]

Elite Member

Joined: 29-Oct-2012

Posts: 4441

From: Germany

@Karlos

Quote:

Because bots do NOT understand...

Hammer

Posted on 7-Mar-2025 21:21:54

[ #152 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6505

From: Australia

@cdimauro

Again, https://www.gamers.org/dEngine/quake/papers/checker_texmap.html

Michael Abrash stated that screen gradients for texture coordinates and 1/z are used to calculate perspective-correct texture coordinates every 8 or 16 pixels, and linear interpolation is used between those points.

Quake's FDIV usage has 8- or 16-pixel skips, and my argument was never about per-pixel FDIV usage.
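A minimal C sketch of where the divides fall in that scheme, assuming a hypothetical `scanline_u` helper that computes only the texture coordinate u (v would reuse the same 1/z). u/z and 1/z are linear in screen x, so they are stepped across the scanline; one perspective divide happens at each 16-pixel boundary and u is interpolated linearly in between. This is not Quake's span code: per the discussion in this thread, the hand-written x86 version also overlaps the fdiv with integer pixel work, which plain C leaves to the compiler.

```c
#include <assert.h>

#define SPAN 16  /* pixels per perspective divide; Abrash describes 8 or 16 */

static int perspective_divides = 0;  /* counts 1/z divides, for illustration */

/* Hypothetical helper: perspective-correct texture u along one scanline.
   uoz = u/z and ooz = 1/z at the left edge; duoz/dooz are their per-pixel steps. */
static void scanline_u(float uoz, float ooz, float duoz, float dooz,
                       int width, float *out_u)
{
    assert(ooz > 0.0f && width >= 0);
    float u = uoz * (1.0f / ooz);       /* exact u at the left edge */
    perspective_divides++;

    for (int x = 0; x < width; x += SPAN) {
        int n = (width - x < SPAN) ? (width - x) : SPAN;

        /* Step to the right end of this run and divide once there. */
        uoz += duoz * (float)n;
        ooz += dooz * (float)n;
        float u_end = uoz * (1.0f / ooz);
        perspective_divides++;

        /* Linear step between the two exact points: a constant multiply
           for full runs, one extra (uncounted) divide for a short tail run. */
        float du = (n == SPAN) ? (u_end - u) * (1.0f / SPAN)
                               : (u_end - u) / (float)n;
        for (int i = 0; i < n; i++) {
            out_u[x + i] = u;
            u += du;
        }
        u = u_end;                      /* resync to the exact value */
    }
}
```

A 64-pixel scanline costs 5 perspective divides instead of 64, and the pixels at each 16-pixel boundary are exact; the pixels in between carry a small interpolation error that grows with how steeply 1/z changes.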

Quote:

| Because bots do NOT understand |

Fuck off Mussolini. You started a personality based flame war. I will return it with interest.

Last edited by Hammer on 07-Mar-2025 at 11:05 PM.

Last edited by Hammer on 07-Mar-2025 at 10:28 PM.


Hammer

Posted on 7-Mar-2025 21:58:44

[ #153 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6505

From: Australia

@bhabbott

Quote:

Google's lying AI says that the first Pentium motherboard supporting SDRAM was the VIA Apollo Pro, introduced in May 1998.

But the Cyberstorm 060 had interleaved 64-bit RAM.

|

The 68060 has an external 32-bit bus, and the Cyberstorm 060 runs at a 50 MHz clock speed.

Interleaving memory helps with lower latency.

The competing P54C systems had motherboards with 256 KB or 512 KB of L2 cache.

https://www.anandtech.com/show/60

PC10 SDRAM: An Introduction

Date: March 3, 1998

Intel 440BX was released in April 1998 for Pentium II Deschutes.

I purchased a 68060 rev 1 to investigate the 1995-1996 variant as a "what if" alternate timeline against my 1996-era Pentium 150 MHz PC, which I overclocked to 166 MHz with the 66 MHz FSB jumper.

I estimated that a 68060-50 would be suboptimal for Quake before Clickboom released Quake for the Amiga in 1998, hence I didn't upgrade my A3000 with a Cyberstorm 060 and CyberGraphics 64 (S3 Trio 64V) in 1996.

Pentium II was released in May 1997.

The legendary budget Celeron 300A was released in August 1998; I purchased one at Christmas (Q4) 1998 and overclocked it to 450 MHz with a 100 MHz FSB.

https://www.philscomputerlab.com/celeron-300a-oc.html

With the TF1260, I overclocked the 68060 rev 1 in the 62.5 to 74 MHz range; 62.5 MHz is stable. The TF1260's bundled 68LC060 rev 4 is stable at around 74 MHz. The 8-stage pipeline's advantage for higher clock speeds doesn't seem to materialize when factors other than the pipeline stage count limit the attainable clock speed.

I purchased TF1260 against people like Matt. Matt is in dreamland.

The 68060's situation is a product of Motorola, and the blame lies fully with Motorola's leadership.

Amiga's primary value added is with the custom chips, not with the off-the-shelf 68K CPU. There are other 68K desktop computers.

I'm a gamer first, hence the Amiga and then gaming PCs were selected over Macs.

Last edited by Hammer on 07-Mar-2025 at 10:38 PM.


Hammer

Posted on 7-Mar-2025 22:30:35

[ #154 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6505

From: Australia

@Karlos

Quote:

| Division is poison. You avoid it or minimise it at all costs in performance critical code. |

My argument was never about per-pixel FDIV usage, since Quake did not implement per-pixel FDIV usage.

Last edited by Hammer on 07-Mar-2025 at 10:32 PM.


Karlos

Posted on 7-Mar-2025 23:59:35

[ #155 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4960

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

@Hammer

I didn't say it was. I said that fdiv is a pretty stupid metric on which to base general system performance, as most code will avoid division if it's optimised for performance. Division, whether integer or floating point, is still the slowest of the regular arithmetic operations even today, let alone in the mid 90's. You use division when you need exact/precise results.

Quake uses it for perspective correction, but it's not strictly necessary: if the divisor range can be normalised to some fixed range that can then be expressed as an integer, that integer can then be used to select a reciprocal from a lookup that is then used as a multiplier instead. Abrash didn't do this because he was able to rearrange the code manually to mask the fdiv latency behind a whole block of other integer instructions. Plus the lookup might end up being too large, depending on the necessary range.

I'm sure a similar approach could be implemented for the 68K as the FPU can calculate the division while the IU is busy doing something else too. However, do any of the Amiga ports have an equivalently hand optimised version of the draw16 code or are they just using the vanilla C?

There were numerous other bits of Quake optimised by Abrash for x86 too that probably lack equivalent attention for 68K.

Comparing release Quake on the Pentium and 68060 is oranges and apples until you are dealing with ports having equivalent optimisation effort.
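One way to apply the "normalise the divisor, then look up a reciprocal" idea to floating point is to let the IEEE-754 exponent do the normalisation: the top mantissa bits index a small table of reciprocals, and the exponent is negated separately. This is a sketch under stated assumptions (32-bit IEEE-754 floats, a hypothetical 256-entry table, hypothetical `approx_recip`/`init_mant_recip` names), not code from Quake or AB3D2. Its relative error is about 0.2%, which may or may not be acceptable for a given perspective-correction use.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

#define MANT_BITS 8                  /* hypothetical: 256-entry table */
#define MANT_SIZE (1 << MANT_BITS)

static float mant_recip[MANT_SIZE];

/* Entry i approximates 1/m for mantissa m in [1 + i/256, 1 + (i+1)/256),
   using the bin midpoint. These are one-off divides at start-up. */
static void init_mant_recip(void)
{
    for (int i = 0; i < MANT_SIZE; i++)
        mant_recip[i] = 1.0f / (1.0f + ((float)i + 0.5f) / (float)MANT_SIZE);
}

/* Hypothetical helper: approximate 1/z for a positive, normal float z < 2^127. */
static float approx_recip(float z)
{
    uint32_t bits;
    memcpy(&bits, &z, sizeof bits);              /* reinterpret float as bits */
    uint32_t e = (bits >> 23) & 0xFFu;           /* biased exponent */
    assert(z > 0.0f && e >= 1u && e <= 253u);
    uint32_t i = (bits >> (23 - MANT_BITS)) & (MANT_SIZE - 1); /* top mantissa bits */

    /* z = m * 2^(e-127) with m in [1,2), so 1/z = (1/m) * 2^(127-e).
       A float with biased exponent 254-e and a zero mantissa equals 2^(127-e). */
    uint32_t scale_bits = (254u - e) << 23;
    float scale;
    memcpy(&scale, &scale_bits, sizeof scale);
    return mant_recip[i] * scale;                /* lookup + multiply, no divide */
}
```

In a span loop, `z = approx_recip(one_over_z)` would stand in for `z = 1.0f / one_over_z`; one Newton-Raphson step (`r = r * (2.0f - z * r)`) could tighten the error if the table alone is too coarse.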


matthey

Posted on 8-Mar-2025 0:54:10

[ #156 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2754

From: Kansas

Hammer Quote:

Your narrative can't overcome Quake benchmark results.

X86 FPU ISA is so horrible that AMD made the K7 Athlon into FPU beast.

|

Let us start with what Michael Abrash says about x86 assembly language in "Michael Abrash's Graphics Programming Black Book Special Edition".

https://www.phatcode.net/res/224/files/html/ch02/02-01.html#Heading1 Quote:

Assembly Is Fundamentally Different

Is it really so hard as all that to write good assembly code for the PC? Yes! Thanks to the decidedly quirky nature of the x86 family CPUs, assembly language differs fundamentally from other languages, and is undeniably harder to work with. On the other hand, the potential of assembly code is much greater than that of other languages, as well.

|

and

https://www.phatcode.net/res/224/files/html/ch63/63-02.html#Heading6 Quote:

FXCH

One piece of the puzzle is still missing. Clearly, to get maximum throughput, we need to interleave FP instructions, such that at any one time ideally three instructions are in the pipeline at once. Further, these instructions must not depend on one another for operands. But ST(0) must always be one of the operands; worse, FLD can only push into ST(0), and FST can only store from ST(0). How, then, can we keep three independent instructions going?

The easy answer would be for Intel to change the FP registers from a stack to a set of independent registers. Since they couldn’t do that, thanks to compatibility issues, they did the next best thing: They made the FXCH instruction, which swaps ST(0) and any other FP register, virtually free. In general, if FXCH is both preceded and followed by FP instructions, then it takes no cycles to execute. (Application Note 500, “Optimizations for Intel’s 32-bit Processors,” February 1994, available from http://www.intel.com, describes all the conditions under which FXCH is free.) This allows you to move the target of a pending operation from ST(0) to another register, at the same time bringing another register into ST(0) where it can be used, all at no cost. So, for example, we can start three multiplications, then use FXCH to swap back to start adding the results of the first two multiplications, without incurring any stalls, as shown in Listing 63.1.

|

The Pentium FXCH trick is a kludge workaround that makes a pipelined FPU worthwhile but it is still far from optimal. Linus Torvalds also talks about the x87 FPU deficiencies (see link for full x87 FPU issues).

https://www.realworldtech.com/forum/?threadid=67661&curpostid=67663 Quote:

- the stack is a horribly horribly bad idea. It looks simple when you use it from assembly for trivial things, but there's a reason we don't use a stack register setup for integer registers, and all the same issues are true for floating point.

Getting compilers to generate sane code is hard, and doing register renaming in hw is just more complicated with a dynamic stack top. And the stack is completely unusable across a function call, since it's limited and doesn't have any graceful overflow behaviour, so you need to either flush all fp registers over any function call, or you need to limit your already limited FP stack register space even more.

|

Linus calls the x87 stack registers "a horribly horribly bad idea" while I called the whole x87 FPU ISA "horrible". Maybe we are both biased because we started on the 68k and have both made comments about the 68k being easier to program?

The Pentium FPU does have some things going for it. It is pipelined and FXCH was shown to be a partially successful kludge workaround for the horrible stack register FPU ISA. The FPU is CISC allowing independent Fload+Fop instructions with no load-to-use latency/stalls which reduces the number of registers needed. The FPU pipeline(s) are shallow and the instruction latencies short which reduces the number of FPU registers needed. Linus also complained about the shortage of x87 FPU registers so FPU register saving features are important.

https://www.realworldtech.com/forum/?threadid=67661&curpostid=67663 Quote:

- too few registers. FP code in particular often likes more registers.

|

Intel favored abandoning the x87 FPU for scalar operations in the SIMD unit as it moved to deeper and deeper pipelines for higher clock speeds, while AMD kept more practical pipeline depths that were slowly growing deeper too. Deeper FPU pipelines make the register shortage worse, although x86 superpipelining fell out of favor after the Pentium 4, and more practical pipelining with lower instruction latencies returned, as it means fewer bubbles/stalls, fewer registers needed and smaller code, which is true for RISC cores too.

The P5 Pentium has a 5-stage integer pipeline and a 3-stage FMUL pipeline. The P6 Pentium has a 14-stage integer pipeline and a 5-stage FMUL pipeline. The result FPU register of each pipelined FMUL instruction that is in flight cannot be touched for the latency of the instruction. Even using CISC Fload+Fop instructions, keeping the pipeline full requires as many result FPU registers as there are FMUL stages, which with a 5-stage FMUL pipeline is most of them. Also, code optimized for the P5 Pentium, like Quake, may stall in the P6 Pentium FPU. The Pentium 4, with its 31-stage integer pipeline, has a 7-cycle FMUL pipeline and practically cannot gain all of the advantage of FMUL pipelining with only 8 FPU registers. RISC FPUs not only have to avoid touching the results of pipelined instructions in flight but commonly also have to avoid touching the results of loads for the load-to-use latency. CISC FPUs save many FPU registers, especially if the pipelining is not too deep. It is not just high-clocked CPU cores that require deep pipelining though; FPGA CPU cores do too. Gunnar rejected my proposal for 16 FPU registers for his FPGA 68k ISA as not enough registers.
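The register cost of keeping a pipelined FPU busy shows up even in plain C. A common technique, shown here as a generic sketch rather than code from Quake or any CPU discussed above, is to split one dependent chain into several independent ones. Three accumulators echo Abrash's "three instructions in the pipeline", but the right count depends on the FMUL/FADD latency, and each extra chain needs another live FPU register. Whether the overlap actually happens is up to the compiler's scheduling and the CPU; the function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* One accumulator: each add must wait for the previous add's result. */
static double dot_serial(const double *x, const double *y, size_t n)
{
    double s = 0.0;
    for (size_t i = 0; i < n; i++)
        s += x[i] * y[i];
    return s;
}

/* Three independent accumulators: the three multiply/add chains do not
   depend on each other, so a pipelined FPU can overlap them, at the cost
   of keeping three partial sums live in registers. */
static double dot_three_chains(const double *x, const double *y, size_t n)
{
    assert(n == 0 || (x != NULL && y != NULL));
    double s0 = 0.0, s1 = 0.0, s2 = 0.0;
    size_t i = 0;
    for (; i + 3 <= n; i += 3) {
        s0 += x[i]     * y[i];
        s1 += x[i + 1] * y[i + 1];
        s2 += x[i + 2] * y[i + 2];
    }
    for (; i < n; i++)              /* leftover elements */
        s0 += x[i] * y[i];
    return (s0 + s1) + s2;
}
```

Because floating-point addition is not associative, the two versions can differ in the last bits for general inputs; they agree exactly only when every partial sum is exactly representable, for example with small integer-valued data.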

Hammer Quote:

Your narrative can't overcome Quake benchmark results.

68060's superscalar design wasn't robust enough with TheForceEngine port.

|

Was TheForceEngine port compiled with GCC? Does GCC have known poor support for the 68060 and 68k FPU? You know 68k support was almost dropped altogether from GCC too? Is this a 68060 problem or a GCC problem?

Hammer Quote:

Unlike the 68060, AC68080's FPU is not attached to the integer pipeline due to a quad instruction issue front end.

|

FPU instructions are multi-cycle on the 68060, giving more time for the instruction buffer to fill. For example, FMUL has a 3-cycle execution latency and 12 bytes can be fetched in that time, while the base FMUL instruction is 4 bytes in length. The 68060 instruction fetch is usually adequate for FPU-heavy code without FPU pipelining. Mixed integer and FPU code is tighter, but usually a few cycles are lost here and there, allowing the decoupled fetch to catch back up.

The AC68080 has a pipelined FPU advantage but most existing and compiler generated 68k FPU code will not gain much performance from it. Instruction scheduling and use of the many FPU registers for the deeply pipelined FPU are required. Lower latency FPU instructions boost legacy 68k FPU code the most and shallow FPU pipelining likely would also but the AC68080 likely has moderate to deep FPU pipelining for a higher clock speed in FPGA.

Hammer Quote:

Gunnar can do as he pleases. Gunnar can flip flop with his projects as he chases new customers. Money doesn't grow on trees.

|

What "customers" has Gunnar caught? Which Amiga accelerators or embedded customers are using Gunnar's AC68080 precious?

Hammer Quote:

Visual C++ 2.0 compiler is what Quake used from "Michael Abrash's Graphics Programming Black Book Special Edition".

Hammer Quote:

For Visual C++,

float for FP32,

double for FP64,

|

I said the "default rounding mode" in the compiler. You obviously do not understand how the 68k and x86 FPUs work. I'll explain with the 68k FPU since it is simpler.

fadd.s (8,a0),fp0

This fadd.s instruction loads the single precision number from memory, converts it to extended precision, adds it to fp0 and then rounds the result using the precision and rounding mode in the FPCR. As I recall, the default rounding precision in the FPCR is round to extended precision and the default rounding mode is round to nearest. Some compilers change these global FPU defaults like setting the precision to double precision for better IEEE consistency at the cost of decreased intermediate calculation precision. I suspect Visual C++ defaults to double precision rounding as there is no other way I am aware of to accomplish this. The 68040 and 68060 added new instructions to select the rounding precision of each FPU instruction.

fdadd.s (8,a0),fp0

This instruction is similar to the instruction above but rounds the result to double precision regardless of the FPCR rounding mode. GCC uses these instructions for 68040 and 68060 code but the executables crash on a 6888x machine. VBCC does not use these instructions choosing the better intermediate extended precision and backward compatibility instead of the increased consistency. An ABI that passes FP arguments in registers would retain more intermediate precision but there is no motivation for improvements when the 68k is EOL. It is expensive to change the 68k FPCR which has an 8 cycle latency on the 68060 and x87 CW which has an 8 cycle latency on the Pentium. This is why it is useful to have instructions that specify the precision and/or rounding mode in the encoding where the 68k has a large advantage and it could be larger with the minor change of introduction of new rounding modes for FINTxx.
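A portable C illustration, not 68k-specific, of why the rounding precision applied to a result matters: the same float operands give different answers depending on whether their sum is rounded to single or to double precision. The `volatile` stores force the rounding at the point shown, so the outcome does not depend on the compiler's `FLT_EVAL_METHOD`; the function names are hypothetical.

```c
#include <assert.h>

/* Sum rounded to single precision before it is used again. */
static float sum_rounded_to_single(float a, float b)
{
    volatile float s = a + b;    /* storing to a float forces single rounding */
    return s - a;
}

/* Same sum rounded to double precision instead. */
static double sum_rounded_to_double(float a, float b)
{
    volatile double s = (double)a + (double)b;
    return s - (double)a;
}
```

For a = 16777216.0f (2^24) and b = 1.0f, 2^24 + 1 needs 25 significant bits, so rounding to single precision (24 bits) gives 2^24 and the first function returns 0.0f, while the double-precision version keeps the 1 and returns 1.0. Without the `volatile` stores, whether an expression like `(a + b) - a` is evaluated in float, double or extended precision depends on `FLT_EVAL_METHOD`, which is the same global-versus-per-instruction precision question in C form.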

Hammer Quote:

NXP/Freescale's PowerPC e200's compressed PPC ISA effectively killed 68K/ColdFire.

|

IBM PPC 405 introduced CodePack compression in 1999 but it was complex and did not compress the code in caches unlike Thumb or MIPS-16. Freescale/NXP was late to the game with PPC VLE, incompatible with CodePack, incompatible with existing PPC code being a reencoded PPC ISA, was only available on a few embedded cores and was poorly supported by compilers but finally delivered a cache savings. IBM selling their PPC embedded designs to AMCC in 2004 and Apple announcing the transition from PPC to x86 in 2005 were not good timing for e200 cores either. The embedded market niche where PPC saw some success was the high end automotive and telecommunication embedded markets where ARM did not have the performance to scale and where neither CodePack or VLE were used. The 68k was killed by Motorola so as not to compete with PPC and ColdFire died because it was not compatible enough with 68k and was less scalable after it had been castrated.

Last edited by matthey on 08-Mar-2025 at 01:13 AM.

cdimauro

Posted on 8-Mar-2025 6:38:05

[ #157 ]

Elite Member

Joined: 29-Oct-2012

Posts: 4441

From: Germany

@Hammer

Quote:

Hammer wrote:

@cdimauro

Again, https://www.gamers.org/dEngine/quake/papers/checker_texmap.html

Michael Abrash stated that screen gradients for texture coordinates and 1/z are used to calculate perspective correct texture coordinates every 8 or 16 pixels, and linear interpolation is used between those points

Quake's FDIV usage has 8 or 16 pixel skips and my argument position was never per pixel FDIV usage. |

You still don't get it and continue to grab and report things related to the topic, but without understanding the real topic.

Karlos has just written another comment trying again to clarify it and let you know, but I doubt that you'll ever learn it.

Learn something that, BTW, was quite obvious and heavily used at the time ("the time" -> when people were trying to squeeze the most from the limited available resources).

But this is something which is obvious only to people who had their hands on this stuff. So, nothing you seem to have done in your life (despite having reported being a developer, though likely in completely different areas).

Quote:

Quote:

| Because bots do NOT understand |

Fuck off Mussolini. |

As I've just said in a comment on another thread, I fully agree!

Quote:

| You started a personality based flame war. |

Again, you're wrong. I haven't started any personal war with you. In fact, I've been calling you a bot for years. So, nothing that started just now.

And nothing like a war, since I was/am simply stating reality: you act exactly like a bot!

Bots don't (really) understand conversations. They haven't a real clue of what a discussion is about. They have no real knowledge of the topic. They have no memory and no ability to recall and report correct information about the topic.

That's exactly how you behave when talking of things where you clearly have no clue and/or experience.

To give another example, you still spread false information about the infamous 128 vs 256-bit AVX "versions". Not like in the past, but sometimes you're still trying to spread this big lie, despite my having proved in every way that AVX has been an inherently 256-bit ISA extension of x86 since day zero.

So, if someone like me calls you a bot, it's only because there are good grounds for it.

Quote:

| I will return it with interest. |

Add yourself to the queue, because Trump and Vance are already at the top of it for mafia methods.

cdimauro

Posted on 8-Mar-2025 6:59:42

[ #158 ]

Elite Member

Joined: 29-Oct-2012

Posts: 4441

From: Germany

@matthey

Quote:

matthey wrote:

Gunnar rejected my proposal for 16 FPU registers for his FPGA 68k ISA as not enough registers. |

Because they were not enough: now you've 32 FPU registers (albeit mixed with data and SIMD registers).

That's because CISCs need many more registers compared to RISCs.

Quote:

Visual C++ 2.0 compiler is what Quake used from "Michael Abrash's Graphics Programming Black Book Special Edition".

Hammer Quote:

For Visual C++,

float for FP32,

double for FP64,

|

I said the "default rounding mode" in the compiler. You obviously do not understand how the 68k and x86 FPUs work. |

Welcome to the "club"...

Quote:

I'll explain with the 68k FPU since it is simpler.

fadd.s (8,a0),fp0

This fadd.s instruction loads the single precision number from memory, converts it to extended precision, adds it to fp0 and then rounds the result using the precision and rounding mode in the FPCR. As I recall, the default rounding precision in the FPCR is round to extended precision and the default rounding mode is round to nearest. Some compilers change these global FPU defaults like setting the precision to double precision for better IEEE consistency at the cost of decreased intermediate calculation precision. |

Indeed, and it's a nasty thing.

Recently I had a discussion with Mitch about some aspects of floating-point instructions on his My 66000 architecture.

Those FPU instructions can use single- or double-precision values, according to a flag in the opcode that carries this information. Since the ISA is fully 64-bit, if one of the operands is a single, it'll be converted to double before the instruction actually executes.

But this means that these conversions can be performed even billions of times if such an instruction is in a loop. So, I suggested introducing a new load instruction for single-precision data, in order to avoid such conversions (e.g.: you load the value and convert it to double just once, before entering the loop, and you're done).

However, he reported that this conversion could cause problems with the IEEE standard, as you've also reported here. Here is an excerpt:

[...]ran into F32 codes that do not produce (IIRC) expected results using K&R C FP rules (F32 promotes to F64*).

[...]x87 80-bit promotion is now seen to be "bad in the eyes of IEEE 754" in terms of the surprise coefficient.

I haven't asked for further clarification, so as not to waste Mitch's time, but I'm still curious to know why. The only part I've found that might be relevant is this, from the ANSI C book (2nd edition):

When a double is converted to float, whether the value is rounded or truncated is implementation dependent.

The reason I'm interested is that my new architecture (which is not NEx64T) works like the x87/6888x for scalars: every FP operation is performed at the maximum precision dictated by the register size (e.g.: 64-bit architecture -> double precision; 32-bit architecture -> single precision). So, I automatically convert all FP data to the maximum precision when I load constants or data from memory.
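A small C illustration of one kind of surprise that promotion can cause (an assumption about what Mitch means, not his example). For a single operation, rounding a double result to float gives the same answer as a native float operation, because double's 53-bit significand is at least 2×24+2 bits; the difference appears when *several* intermediates are kept wide and rounded only once at the end. The helper names are hypothetical.

```c
#include <assert.h>

/* Strict F32: every operation is rounded to single precision. */
static float strict_single(float a, float c)
{
    volatile float p = a * a;   /* product rounded to float here */
    volatile float r = p + c;   /* sum rounded to float here */
    return r;
}

/* Wide intermediates: compute in double and round to float only once,
   similar in spirit to promoting F32 operands into F64/F80 registers. */
static float wide_intermediates(float a, float c)
{
    volatile double p = (double)a * (double)a;  /* exact for float inputs */
    volatile double r = p + (double)c;
    return (float)r;
}
```

With a = 1.0f + 1.0f/4096 (1 + 2^-12) and c = -1.0f, the exact a*a is 1 + 2^-11 + 2^-24. Rounding the product to single precision drops the 2^-24 term (an exact tie that rounds to even), so `strict_single` returns 2^-11, while `wide_intermediates` returns 2^-11 + 2^-24. Each individual operation still agrees across the two precisions; only the point at which rounding happens changes the result.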

Quote:

I suspect Visual C++ defaults to double precision rounding as there is no other way I am aware of to accomplish this. The 68040 and 68060 added new instructions to select the rounding precision of each FPU instruction.

fdadd.s (8,a0),fp0

This instruction is similar to the instruction above but rounds the result to double precision regardless of the FPCR rounding mode. GCC uses these instructions for 68040 and 68060 code but the executables crash on a 6888x machine. VBCC does not use these instructions choosing the better intermediate extended precision and backward compatibility instead of the increased consistency. An ABI that passes FP arguments in registers would retain more intermediate precision but there is no motivation for improvements when the 68k is EOL. It is expensive to change the 68k FPCR which has an 8 cycle latency on the 68060 and x87 CW which has an 8 cycle latency on the Pentium. This is why it is useful to have instructions that specify the precision and/or rounding mode in the encoding where the 68k has a large advantage and it could be larger with the minor change of introduction of new rounding modes for FINTxx. |

Exactly. So, either you need a complete set of instructions where you directly define the precision or rounding mode, or you need a mechanism which allows you to override them on the fly.

On my new architecture I have normal instructions, which use the rounding mode set in the status register, and longer instructions where it's set directly in the opcode. Problem solved, but costly (2 more bytes).

Quote:

Hammer Quote:

NXP/Freescale's PowerPC e200's compressed PPC ISA effectively killed 68K/ColdFire.

|

IBM PPC 405 introduced CodePack compression in 1999 but it was complex and did not compress the code in caches unlike Thumb or MIPS-16. Freescale/NXP was late to the game with PPC VLE, incompatible with CodePack, incompatible with existing PPC code being a reencoded PPC ISA, was only available on a few embedded cores and was poorly supported by compilers but finally delivered a cache savings. IBM selling their PPC embedded designs to AMCC in 2004 and Apple announcing the transition from PPC to x86 in 2005 were not good timing for e200 cores either. The embedded market niche where PPC saw some success was the high end automotive and telecommunication embedded markets where ARM did not have the performance to scale and where neither CodePack or VLE were used. The 68k was killed by Motorola so as not to compete with PPC and ColdFire died because it was not compatible enough with 68k and was less scalable after it had been castrated. |

Despite this, such PPC and MIPS VLE extensions weren't as good as Thumb-2 and 68k.

matthey

Posted on 9-Mar-2025 1:11:17

[ #159 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2754

From: Kansas

cdimauro Quote:

Because they were not enough: now you've 32 FPU registers (albeit mixed with data and SIMD registers).

That's because CISCs need much more registers compared to RISCs.

|

The 68k FPU already has 16 registers for the FPU if the 8 data registers are counted with the 8 FPU registers. The data registers can only be used as a source of FPU instructions which does not help with deeply pipelined FPUs which need more independent destination result registers. A new encoding could allow data register destinations but they have a higher cost than FPU registers with 68k FPUs. For example, let us take a look at some instruction costs on the 68060.

fmove fp1,fp0 ; 1 cycle, 4 bytes, pOEP-but-allows-sOEP

fmove.s (a0),fp0 ; 1 cycle, 4 bytes, pOEP-but-allows-sOEP

fmove.s d0,fp0 ; 3 cycles, 4 bytes, pOEP-only

fmove.w d0,fp0 ; 6 cycles, 4 bytes, pOEP-only

fmove.w #1,fp0 ; 4 cycles, 6 bytes, pOEP-only

fmove.s #1,fp0 ; 1 cycle, 8 bytes, pOEP-only

Using data registers, integer formats and even immediates is not free. It may be possible to make immediates free with a larger instruction buffer, fixed-length instruction slots with room for the immediates, and superscalar execution of instructions longer than 6 bytes (the pOEP and sOEP likely work together to provide 12 bytes/cycle of instructions, enough for a double precision immediate, to keep the latency at 1 cycle). Integer conversions have an expensive 3 cycle cost, perhaps because they share existing FPU pipelines. Using data registers has a 2 cycle latency, which is not too bad except that using cached memory is usually free. It may be possible to reduce or eliminate the 2 cycle data register cost by using a unified integer unit and FPU register file. I suspect that the AC68080 uses a unified register file with 64-bit registers for the integer unit, FPU and SIMD unit. The disadvantages of a unified register file for the 68k ISA may be more register file read and write ports, which may not be able to maintain timing at higher clock speeds, an incompatible 64-bit FPU and a SIMD unit with only 64-bit registers. I believe it is common for FPU and SIMD unit register files to be shared, but they are often wider than 64 bits, which would be bad for integer performance if the integer unit register file were unified too. If planning for an FPGA-only core with only an incompatible 64-bit 68k FPU and 64-bit SIMD unit forever, then maybe it is an acceptable compromise. PA-RISC and the 88k performed SIMD in the integer unit register files, which could have led to problems with upgrading to wider SIMD unit registers. The 88110 added 32 extended precision registers and perhaps could have added split SIMD unit registers as well, but compatibility would still need to be maintained, including 32-bit SIMD in the integer register file for the 88k and 32-bit and 64-bit SIMD in the integer register file for PA-RISC.
Maybe these architectures could have continued while maintaining compatibility, as narrow SIMD support like MMX is baggage for x86-64 too.
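To make the size side of this trade-off concrete, here is a minimal C sketch of how an assembler or compiler might pick an exact immediate format for an FPU constant. It assumes the usual 68k FPU layout of a 2-byte opcode word and a 2-byte command word followed by the immediate, which matches the 6 and 8 byte figures above; the enum, the function names and the `prefer_speed` switch are hypothetical, and the speed rule only mirrors the timings listed above, where the integer immediate is smaller but slower.

```c
#include <float.h>
#include <math.h>
#include <stdint.h>

/* Hypothetical immediate formats for "fmove.<fmt> #imm,fpN". */
typedef enum { IMM_W, IMM_L, IMM_S, IMM_D } imm_fmt;

/* Instruction size in bytes, assuming a 2-byte opcode word, a 2-byte
   command word, then the immediate (.w 2, .l/.s 4, .d 8 bytes). */
static int fmove_imm_bytes(imm_fmt f)
{
    switch (f) {
    case IMM_W: return 4 + 2;
    case IMM_L:
    case IMM_S: return 4 + 4;
    default:    return 4 + 8;   /* IMM_D */
    }
}

/* Nonzero if v is an integer in [lo, hi]; -0.0 is rejected because an
   integer immediate would lose the sign of zero. */
static int is_int_in(double v, double lo, double hi)
{
    if (!(v >= lo && v <= hi))  /* also rejects NaN */
        return 0;
    if (v == 0.0 && signbit(v))
        return 0;
    return (double)(int64_t)v == v;
}

/* Nonzero if v survives a round trip through single precision. */
static int is_exact_single(double v)
{
    if (v != v)                        /* NaN: keep .d to be safe */
        return 0;
    if (v > DBL_MAX || v < -DBL_MAX)   /* +/-infinity is exact in single */
        return 1;
    if (v > FLT_MAX || v < -FLT_MAX)   /* finite but outside single range */
        return 0;
    return (double)(float)v == v;
}

/* Pick an immediate format that represents v exactly. With prefer_speed,
   skip integer formats to avoid the int-to-float conversion penalty. */
static imm_fmt choose_fmove_imm(double v, int prefer_speed)
{
    int single_ok = is_exact_single(v);
    if (prefer_speed)
        return single_ok ? IMM_S : IMM_D;
    if (is_int_in(v, -32768.0, 32767.0))
        return IMM_W;                  /* 6 bytes, smallest encoding */
    if (single_ok)
        return IMM_S;                  /* same size as .l, no conversion */
    if (is_int_in(v, -2147483648.0, 2147483647.0))
        return IMM_L;
    return IMM_D;
}
```

With `prefer_speed` unset, `choose_fmove_imm(1.0, 0)` picks `.w` (6 bytes); with it set, the same constant is emitted as `.s` (8 bytes, but no integer conversion).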

cdimauro Quote:

Indeed, and it's a nasty thing.

Recently I had a discussion with Mitch about some aspects of floating-point instructions on his My 66000 architecture.

Those FPU instructions can use single or double precision values, according to a flag in the opcode which carries this information. Since the ISA is fully 64-bit, if one of the operands is a single, it is converted to double before the physical instruction executes.

But this means that these conversions can be performed billions of times if such an instruction is in a loop. So, I suggested introducing a new load instruction for single precision data, in order to avoid such conversions (e.g.: you load the value and convert it to double just once, before entering the loop, and you're done).

However, he reported that this conversion could cause problems with the IEEE standard, as you've also reported here. Here is an excerpt:

[...]ran into F32 codes that do not produce (IIRC) expected results using K&R C FP rules (F32 promotes to F64*).

[...]x87 80-bit promotion is now seen to be "bad in the eyes of IEEE 754" in terms of the surprise coefficient.

I haven't asked him for further clarification, so as not to waste Mitch's time, but I'm still curious to know why. The only thing I've found that might be relevant is this part of the ANSI C book (2nd edition):

When a double is converted to float, whether the value is rounded or truncated is implementation dependent.

The reason I'm interested is that my new architecture (which is not NEx64T) works like the x87/6888x for scalars: every FP operation is performed at the maximum precision dictated by the register size (e.g.: 64-bit architecture -> double precision, 32-bit architecture -> single precision). So, I automatically convert all FP data to the maximum precision when I load constants or data from memory.

|

Perhaps the thinking is that a single precision value can be converted to double and still be used for single precision calculations, since its precision has only increased? The flaw in such thinking may be double rounding error when a result computed in double precision is rounded back to single precision.
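Double rounding is easy to reproduce with a value that lands exactly halfway between two single precision numbers only after the first rounding. A minimal C sketch, assuming IEEE 754 binary32/binary64 and the default round-to-nearest-even mode; the value is written as a hexadecimal string, which C99 `strtof`/`strtod` parse with correct rounding, so the only roundings are the ones shown. The function names are hypothetical. Promoting a float to double is always exact; the problem only appears when a wider value is rounded back down.

```c
#include <stdlib.h>

/* Exact value 1 + 2^-24 + 2^-64 as a hex float string (2^-24 is the 6th
   hex digit, 2^-64 the 16th), so parsing adds no decimal error. */
static const char *const k_value = "0x1.0000010000000001p0";

/* One rounding: exact value straight to single precision. It lies above
   the midpoint between 1 and 1 + 2^-23, so it rounds up to 1 + 2^-23. */
static float round_once(const char *s)
{
    return strtof(s, NULL);
}

/* Two roundings: first to double (the 2^-64 is lost, leaving exactly
   1 + 2^-24, a tie), then to single, where ties-to-even gives 1.0f. */
static float round_twice(const char *s)
{
    double d = strtod(s, NULL);
    return (float)d;
}

/* Promotion in the other direction never rounds. */
static int promotion_is_exact(float f)
{
    return (float)(double)f == f;
}
```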

https://hal.science/hal-01091186/document Quote:

Double rounding occurs when a floating-point value is first rounded to an intermediate precision before being rounded to a final precision. The result of two such consecutive roundings can differ from the result obtained when directly rounding to the final precision. Double rounding practically happens, for instance, when implementing the IEEE754 binary32 format with an arithmetic unit performing operations only in the larger binary64 format, such as done in the PowerPC or x87 floating-point units. It belongs to the folklore in the floating-point arithmetic community that double rounding is innocuous for the basic arithmetic operations (addition, division, multiplication, and square root) as soon as the final precision is about twice larger than the intermediate one. This paper adresses the formal proof of this fact considering underflow cases and its extension to radices other than two.

|

There is good reason for single precision scalar results to be stored in the lowest single precision lane of an FPU/SIMD register, as it is friendlier for SIMD use. Consistency with performing the same vector operation in a SIMD lane requires performing the scalar operation at the same precision. It should be possible to load the single precision number and convert it from single to double precision before the loop starts if the number is only used for double precision calculations, the number in memory has not changed and the rounding mode has not changed. FPU/SIMD combinations have changed the expectations of FPUs and put pressure on the IEEE standards committee. Compiler developers do not complain because consistent results are easier to test. It was popular, and IEEE compliant, for FPUs to promote all values to double or extended precision on load, perform all calculations at maximum precision, retain all variables and function args at maximum precision and only down-convert on stores to memory using the given precision. This means that not only do the 68k and x87 FPUs produce results that differ from strict double and single precision, as originally intended, but also, for example, PPC FPU single precision results are inconsistent with a single precision SIMD unit lane. There are other differences between the classic FPU and SIMD FPU that can give different results, like underflow/overflow detection (as Linus Torvalds mentions if you read his entire post), denormal/subnormal and NaN handling, flush to zero, exceptions, global precision and rounding settings, etc. The classic FPU was about maximum precision and features, while the SIMD FPU is about performance and now consistency. The best of both worlds would be to have both a classic FPU and a SIMD unit and use the benefits of both.
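The difference between the two FPU styles can be shown with one expression evaluated both ways. A minimal C sketch, assuming IEEE 754 binary32/binary64, round-to-nearest-even and `FLT_EVAL_METHOD == 0` (for example SSE on x86-64); on an x87 or 6888x build the "single" version may itself carry excess precision, which is the inconsistency described above. The function names are hypothetical.

```c
/* SIMD-lane style: every operation is rounded to single precision. */
static float add_sub_single(float a, float b)
{
    float t = a + b;          /* rounded to single here */
    return t - a;
}

/* Classic-FPU style: operands promoted on load, the intermediate kept in
   double precision, rounded to single only when the result is stored. */
static float add_sub_classic(float a, float b)
{
    double t = (double)a + (double)b;   /* exact for these inputs */
    return (float)(t - a);
}
```

With `a = 1.0f` and `b = 0x1p-24f`, the SIMD-lane version returns 0 because 1 + 2^-24 rounds back to 1 in single precision (a tie, broken to even), while the classic version returns 2^-24.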
There are people who still carefully use the advantages of the extended precision x87 FPU despite its deprecation, despite its horrible stack based ISA and despite Intel's bugs, which go beyond the Pentium FDIV bug.

https://randomascii.wordpress.com/2014/10/09/intel-underestimates-error-bounds-by-1-3-quintillion/

The x87 FPU gave extended precision FPUs a bad reputation, like x86 gave CISC a bad reputation, but the 68k extended precision FPU ISA is much better, other than Motorola removing instructions from hardware (the 68040 and 68060 trap many of the 6888x instructions to software emulation). Many of the advantages of extended precision FPUs have been forgotten except by the scientists and engineers who need the extra precision.

o extended precision is designed to retain full double precision accuracy

o fewer instructions are needed for many double precision math algorithms

o wider exponent range reduces slow denormal/subnormal handling

o the exponent range is the same as IEEE quad precision, so double extended (pairs of extended values) could support quad precision
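The exponent range point can be sketched with float and double standing in for double and extended. That substitution is an assumption made only to keep the example portable, since `long double` is not extended precision on every compiler. A minimal C sketch; the function names are hypothetical.

```c
#include <float.h>

/* a*b/c with the intermediate product kept in single precision. */
static float scale_single(float a, float b, float c)
{
    float p = a * b;            /* may overflow to inf or underflow to 0 */
    return p / c;
}

/* Same expression with the intermediate in double precision, whose wider
   exponent range holds the product; rounded to single only at the end. */
static float scale_wide(float a, float b, float c)
{
    double p = (double)a * b;   /* exact: 24-bit x 24-bit fits in 53 bits */
    return (float)(p / c);
}

/* Nonzero if x overflowed to +/-infinity. */
static int is_overflow(float x)
{
    return x > FLT_MAX || x < -FLT_MAX;
}
```

For `a = b = c = 1e30f` the single precision product overflows to infinity, and for `1e-30f` it underflows to zero, while the wider intermediate returns the input value in both cases.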

The x87 FSIN inaccuracy, for example, comes from argument reduction with an insufficiently precise pi constant, not from extended precision itself. The extended precision FPU is about as popular as CISC right now, but the old tech was created for valid reasons and still fulfills a purpose. The extended precision 68k FPU could be improved with FMA, which further reduces the number of instructions in math algorithms; half precision support, which takes further advantage of 68k immediate compression; a new rounding mode that allows easier double extended quad precision support; a new ABI that passes most FP args in floating point registers with any extras placed on the stack in extended precision (variables and function args need to be retained in extended precision); etc. VBCC currently stores function args on the stack in the precision of the args and the function loads them back in, which is double rounding, but that is what the 68k System V ABI specifies, and storing all FPU args on the stack in extended precision would be very slow. I know how to fix the problem, but the 68k was already too dead to motivate Volker to improve the 68k backend, and that was before EOL emulation. I improved the 68k FPU support code while working within existing limitations, but the work is not complete or fully IEEE or C99 compliant. Many of the extended precision issues apply to performing single precision in a double precision FPU too. At least the PPC ABI specifies passing function args in FPU registers, even for single precision args, which was the way to go until the A1222 dropped the standard PPC FPU, at which point 68k ABI style stack args would have been better.
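Building a wider precision from pairs of values (double-word arithmetic) relies on error-free transformations such as Knuth's TwoSum, which recovers the exact rounding error of an addition. A minimal C sketch using double as the base format; using extended precision as the base, as suggested above, is not shown, and the `dword` type and function names are hypothetical. It assumes round-to-nearest and no excess precision (`FLT_EVAL_METHOD == 0`).

```c
/* Knuth's TwoSum: s = fl(a + b) and err is the exact rounding error, so
   a + b == s + err exactly (round-to-nearest, no excess precision). */
static void two_sum(double a, double b, double *s, double *err)
{
    double sum = a + b;
    double bv  = sum - a;       /* the part of b that made it into sum */
    double av  = sum - bv;      /* the part of a that made it into sum */
    *s   = sum;
    *err = (a - av) + (b - bv);
}

/* Hypothetical double-word value: hi + lo, with |lo| small next to hi. */
typedef struct { double hi, lo; } dword;

/* Simplified double-word addition (not the most accurate variant). */
static dword dword_add(dword x, dword y)
{
    double s, e;
    two_sum(x.hi, y.hi, &s, &e);
    e += x.lo + y.lo;
    /* Renormalize with Fast2Sum so hi carries the rounded sum. */
    double hi = s + e;
    double lo = e - (hi - s);
    dword r = { hi, lo };
    return r;
}
```

Adding 2^-60 to 1.0 leaves `hi == 1.0` with the 2^-60 kept in `lo`, something a single double cannot hold; a production version would use a more careful renormalization.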

I hope my meandering and perhaps ranting explanation is more useful than confusing. It sounds like Mitch is trying to create a SIMD style FPU while we are more familiar with a classic FPU. Either way, rounding overhead is sometimes unavoidable. The classic FPU pipelines for it and gains the benefit of higher precision calculations while the SIMD FPU has more performance and consistency but perhaps greater precision conversion costs.

cdimauro Quote:

Exactly. So, either you need a complete set of instructions where you directly define the precision or rounding mode, or you need a mechanism which allows you to override them on the fly.

On my new architecture I have normal instructions which use the rounding mode set in the status register, and longer instructions where it's set directly in the opcode. Problem solved, but costly (2 more bytes).

|

The 68k FPU encodings are not particularly compact, but the extra encoding space was useful for adding the FSop and FDop instructions. The spare space may seem free, but only because the lunch was already paid for. If you only support single and double precision, then only one encoding bit is needed to select between the two precisions. With that bit, it is possible to support SIMD style FPUs that perform single precision ops in single precision instead of converting to double precision like classic FPUs.

Hammer Quote:

Despite this, such PPC and MIPS VLE extensions weren't as good as Thumb-2 and 68k.

|

Thumb-2 was a big improvement over Thumb in performance but not so much in code density. I was referring to PPC CodePack, which predated Thumb-2, although PPC VLE in the Freescale/NXP PPC e200 core would have competed with the newly introduced Thumb-2. PPC VLE uses both 16-bit and 32-bit encodings like Thumb-2. I have never seen independently produced statistics for VLE (or CodePack) code, and as far as I'm aware it was only for e200 cores, whereas ARM aggressively added Thumb-2 to their cores, which were all low end, low power cores. IBM only added CodePack to their lowest end PPC embedded cores as well. Today, ARM believes Thumb(-2) is only for the low end, low power Cortex-M cores and that no compressed ISA is necessary for the higher end Cortex-A cores, while almost all RISC-V general purpose cores, and the OS distributions, support the optional compressed RISC-V encodings. Maybe this is due to how bad RISC-V code density is without the optional compressed support, while ARM sees no threat from RISC-V compressed code, which is nowhere near Thumb-2 or 68k code density. There is just not much code density competition for ARM anymore, even though they are now doing what IBM and Freescale/NXP did by only adding compressed code support to their low end cores.
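Mixed 16/32-bit encodings like Thumb-2 and compressed RISC-V let the decoder find each instruction's length from its first halfword. A minimal C sketch of those two length rules as defined in the ARM and RISC-V architecture specifications, plus a helper that walks a halfword stream; PPC VLE is not covered, RISC-V encodings longer than 32 bits are rejected, and the function names are hypothetical.

```c
#include <stdint.h>

/* Thumb-2: top five bits 0b11101, 0b11110 or 0b11111 mean a 32-bit
   encoding; anything else is a 16-bit encoding. */
static int thumb2_len(uint16_t hw)
{
    unsigned top5 = hw >> 11;
    return (top5 == 0x1D || top5 == 0x1E || top5 == 0x1F) ? 4 : 2;
}

/* RISC-V: low two bits != 0b11 means a 16-bit compressed encoding;
   otherwise low five bits != 0b11111 means a standard 32-bit encoding.
   Longer encodings return 0 (not handled in this sketch). */
static int riscv_len(uint16_t hw)
{
    if ((hw & 0x3) != 0x3)
        return 2;
    if ((hw & 0x1C) != 0x1C)
        return 4;
    return 0;
}

/* Total bytes of a halfword stream and instruction count (via *count),
   or -1 if an unsupported encoding is found. */
static long stream_bytes(const uint16_t *hw, long n, int (*len)(uint16_t),
                         long *count)
{
    long i = 0, bytes = 0;
    *count = 0;
    while (i < n) {
        int l = len(hw[i]);
        if (l == 0)
            return -1;
        bytes += l;
        i += l / 2;             /* advance by halfwords */
        ++*count;
    }
    return bytes;
}
```

Dividing the byte total by the instruction count gives the average instruction length, one simple way to compare code density between encodings.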

cdimauro

Re: Market Size For New Games requiring 68040+ (060, 080)

Posted on 9-Mar-2025 8:37:26

[ #160 ]

Elite Member

Joined: 29-Oct-2012

Posts: 4441

From: Germany

@matthey

Quote:

matthey wrote:

cdimauro Quote:

Because they were not enough: now you have 32 FPU registers (albeit shared with data and SIMD registers).

That's because CISCs need many more registers than RISCs.

|