
Hans

Re: what is wrong with 68k

Posted on 12-Nov-2024 8:01:00

[ #61 ]

Elite Member

Joined: 27-Dec-2003

Posts: 5109

From: New Zealand

So many BS threads lately...

The only thing "wrong" with 68K is that Motorola/Freescale/whatever stopped actively developing it, and it therefore hasn't had anywhere near the level of improvement that its main competitor, the x86, has had.

Hans

_________________

Join the Kea Campus - upgrade your skills; support my work; enjoy the Amiga corner.

https://keasigmadelta.com/ - see more of my work

Karlos

Re: what is wrong with 68k

Posted on 12-Nov-2024 9:53:53

[ #62 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4716

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

@Hans

Facts.

@matthey

Just for the avoidance of doubt, I consider the 68K ISA as the Lingua Franca for AmigaOS. Ideally we'd all be using it on top of whatever physical substrate we want. Only lower level kernel components, drivers, datatypes etc. truly need to be native. Most application code is not compute bound (especially on the Amiga where compute bound code can be an impediment to multitasking).

As for your reasons for thinking there's a viable market, 8-bit MCUs continue to be used in embedded applications because the applications they are used for are ideally suited. Your only segment where a modern 68K implementation might be viable puts you in direct competition with multiple generations of ARM processor, from 32-bit single core to 64-bit multi core with all the extension trimmings. All boasting a huge library of existing software and tools, mass production, low cost, low power and decades of engineer experience in using them.

Good luck cracking that wide open.

Quote:

I believe there are people, even developers and investors, interested in seeing the 68k revived and modern implementations in silicon for historic reasons and for posterity sake. |

How is that a business model at all, let alone one worth an initial outlay measured in millions?

Last edited by Karlos on 12-Nov-2024 at 03:02 PM.

_________________

Doing stupid things for fun...

Karlos

Re: what is wrong with 68k

Posted on 12-Nov-2024 10:34:07

[ #63 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4716

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

I love 68K but the fact is that the fastest 68K accelerator I can buy in 2024 is a virtual 68040 on ARM. If the OS and software (not to mention the Amiga hardware) doesn't care, why should I?

Last edited by Karlos on 12-Nov-2024 at 10:36 AM.

matthey

Re: what is wrong with 68k

Posted on 12-Nov-2024 21:30:17

[ #64 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2395

From: Kansas

Hans Quote:

The only thing "wrong" with 68K is that Motorola/Freescale/whatever stopped actively developing it, and it therefore hasn't had anywhere near the level of improvement that it's main competitor the x86 has had.

|

Are you talking about the 68k ISA/ABI, CPU core designs or both?

As far as the 68k ISA, the 68k has avoided following many of the x86 mistakes and has much less baggage which allows for smaller cores that can scale lower. The x86-64 and ARM64/AArch64 ISAs have thousands of instructions supported as standard. ARM64/AArch64 is a larger standard than the PPC standard with more instructions and registers yet not as large as the x86-64 standard. Even for PPC, there were attempts to castrate the ISA for embedded use by removing support like standard FPUs, simplifying MMUs and introducing a Variable Length Encoding (VLE) PPC ISA replacement in order to scale lower to compete with ARM Thumb(-2). Embedded PPC before it died ended up as a butchered mess compared to the old desktop PPC standard. Motorola/Freescale scaled down the 68k ISA into ColdFire because they knew the PPC couldn't scale so far. They made mistakes though by castrating too much, not making ColdFire a compatible subset of the 68k and not planning to ever scale it up so ignoring any plans for 64-bit support. The ColdFire ISA brought back a big chunk of the castrated 68k ISA but 68k compatibility could not be regained, scalability up was never a goal and the damage was already done. ColdFire had moderate success for the embedded market as did PPC but Freescale was losing market share with two very different ISAs that didn't scale well. The 68k was not only alive but the market leader for 32-bit CPU sales for embedded use up to about 2000. The 68060 was available from Motorola/Freescale/NXP up until 2015 after 21 long years of non-upgraded service despite the 1994 68k ISA, ridiculous 50MHz max clock rating for an 8-stage CPU undermining its price efficiency (performance/$), only 8kiB I+D caches (no L2 caches), only 32-bit data bus (cost savings for embedded use) and no integrated memory controller. 
The 68060 had embedded success because of performance efficiency (performance/MHz) that ARM did not have (low clock speeds have advantages for embedded use), good power efficiency (performance/W) that blew the Pentium away, industry-leading code density for cache efficiency, reduced memory bandwidth and tiny footprint systems, and a very simple and easy to use 68k ISA with an in-order design that minimizes stalls for good performance with legacy 68k code and easy instruction scheduling (much easier instruction scheduling than the Pentium and PPC603 competition). The 68060 has so much potential that could be unlocked by utilizing the 8-stage pipeline and newer fab processes to clock it up, increasing the caches to 32kiB I+D, adding higher level caches, adding a 64-bit data bus, adding an integrated memory controller with support for modern memory, etc. Code density can be improved further and ColdFire compatibility can be improved by adding some of the ColdFire ISA improvements (MVS like MOVSX, MVZ like MOVZX, BYTEREV like BSWAP, etc.).
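To make the three ColdFire additions named above concrete, here is a minimal Python sketch of the 32-bit register result each one produces. The function names are hypothetical, and condition codes and addressing modes are deliberately ignored. On a classic 68k the same results typically take two or three instructions (for example MOVE.B followed by EXTB.L on a 68020+ for sign extension, MOVEQ #0 followed by MOVE.B for zero extension, and ROR.W #8 / SWAP / ROR.W #8 for a byte swap), which is where a code density gain would come from.

```python
# Hypothetical helpers modelling only the register results of the ColdFire
# MVS (sign-extend), MVZ (zero-extend) and BYTEREV (byte swap) instructions.
# Condition codes and addressing modes are not modelled.

MASK32 = 0xFFFF_FFFF
SIZE_BITS = {"b": 8, "w": 16}  # .B and .W operand sizes

def mvs(value: int, size: str) -> int:
    """Sign-extend a byte or word to 32 bits, like MVS.B / MVS.W."""
    bits = SIZE_BITS[size]
    value &= (1 << bits) - 1          # keep only the source operand bits
    if value & (1 << (bits - 1)):     # sign bit set: negative two's complement
        value -= 1 << bits
    return value & MASK32             # 32-bit register bit pattern

def mvz(value: int, size: str) -> int:
    """Zero-extend a byte or word to 32 bits, like MVZ.B / MVZ.W."""
    return value & ((1 << SIZE_BITS[size]) - 1)

def byterev(value: int) -> int:
    """Reverse the byte order of a 32-bit register, like BYTEREV (x86 BSWAP)."""
    return int.from_bytes((value & MASK32).to_bytes(4, "big"), "little")
```

For example, `mvs(0x80, "b")` gives `0xFFFFFF80`, `mvz(0x80, "b")` gives `0x80`, and `byterev(0x12345678)` gives `0x78563412`.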

The 68k ISA and even high performance cores like the 68060 scaled down better than PPC cores before the ColdFire attempt to scale it into MCU territory. ColdFire cores often had better performance than ARM cores for MCUs, but low power and tiny cores are more important there, where Thumb(-2) has advantages and can scale lower. The 68k was better off competing above tiny cores with minimal code and scaling up, but PPC was given priority where the 68k was at its best, and PPC didn't scale down too well. The 68000 and CPU32 ISAs are reduced subset 68k ISAs that could have maintained 68k compatibility for embedded use. The Hyperion 68k AmigaOS 3.x is compiled for a 68000 target which only has 56 instructions. The 68060 has maybe 100-200 instructions. Not having a SIMD unit saves a lot of instructions, transistors and register bits, and I'm not convinced SIMD should be made standard in a 68k ISA anyway. Going back to earlier ISA standards actually is easier in some ways and saves a lot of resources for x86(-64), but the problem is that too much software has already been upgraded to newer standards. This is part of the reason the x86 in-order Bonnell Atom and Larrabee GPGPU microarchitectures were abandoned, and why smaller, lower power x86 cores are rare. They are still possible, though, and have other benefits, like the in-order Vortex86 cores being immune to many vulnerabilities.

https://linuxgizmos.com/vortex86-cpus-gain-new-life-with-linux-kernel-detection-and-a-3-5-inch-sbc/ Quote:

ICOP notes that due to their in-order design and lack of CLFLUSH instructions, Vortex86 CPUs are not vulnerable to Spectre and Meltdown attacks. In addition, says ICOP, "The Linux kernel has properly catered to Vortex86 and identified them correctly applying the no performance-hitting mitigations."

|

The 68060 is also a relatively simple in-order CPU with limited speculation and sequential ordering of memory accesses.

M68060 User's Manual Quote:

7.10 BUS SYNCHRONIZATION

The MC68060 integer unit generates access requests to the instruction and data memory units to support integer and floating-point operations. Both the fetch and write-back stages of the integer unit pipeline perform accesses to the data memory unit. All read and write accesses are performed in strict program order. Compared with the MC68040, the MC68060 is always "serialized". This feature makes it possible for automatic bus synchronization without requiring NOPs between instructions to guarantee serialization of reads and writes to I/O devices.

|

This is far from the case for most limited OoO PPC CPU cores or RISC cores in general. The 68060 design has good performance that competed well against early limited OoO shallow PPC core designs like the PPC603(e), which was scaled up into the PPC G3 design. The case can be made for simpler in-order CPU cores and simpler ISAs. The 68060 has good performance with a relatively simple and small in-order CPU core, limited speculation and sequential ordering, a simple and small ISA, small code and very easy programming. The 68060 design was more of a balanced design for embedded use than a high performance desktop design like the Pentium. There is a lot that can be done to increase its performance, which may allow it to compete with low end OoO RISC cores like it did against limited OoO PPC cores. Some 68k ISA improvements and a 68k64 mode/ISA are desirable, but x86-64 should serve as a bad example of the massive baggage to be avoided. Even ARM64/AArch64 isn't the simple and small ARM that conquered the embedded market. No, that was ARM Thumb(-2), with 68k-like code density, developed after licensing SuperH from Hitachi, which was a second source producer of the 68000.

Karlos Quote:

As for your reasons for thinking there's a viable market, 8-bit MCUs continue to be used in embedded applications because the applications they are used for are ideally suited. Your only segment where a modern 68K implementation might be viable puts you in direct competition with multiple generations of ARM processor, from 32-bit single core to 64-bit multi core with all the extension trimmings. All boasting a huge library of existing software and tools, mass production, low cost, low power and decades of engineer experience in using them.

Good luck cracking that wide open.

|

The original ARM ISA had neither good performance nor good code density. ARM decided they needed an ISA with better code density for the embedded market. Thumb(-2) was a copy of SuperH with improvements, but SuperH was a poor copy of the 68k, which is still superior. ARM Thumb(-2) has 68k-like code density but weak performance. It was enough to conquer the embedded market with PPC as the competition and the 68k hidden in the basement. ARM decided they wanted an ISA with more performance, so the ARM64/AArch64 ISA was created by copying the higher performance PPC and improving it, like they had done by copying SuperH and improving it for Thumb(-2). ARM64/AArch64 cores are larger than the PPC cores that were trying to scale down to compete with Thumb(-2). Between the weak, very low end Thumb(-2) and the ARM64/AArch64 high end, which is fat for embedded use, is the vulnerable point to drive a wedge. ARM64/AArch64 is a giant with momentum, but it is more vulnerable than x86-64 due to lack of software for its targeted markets. Even the RPi doesn't have much software that couldn't be ported to other platforms, and it relies too much on emulation for games.

Karlos Quote:

How is that a business model at all, let alone one worth an initial outlay measured in million?

|

I didn't say it was a business model. I'm just saying that there are 68k fans and developers who could and likely would help support an effort to revive the 68k. A $6,000 bounty was raised to save the 68k GCC backend with not much news or fanfare. Yes, it is not much but then it was just a compiler backend. The 68k nostalgia is an advantage like the nostalgia of retro computing has become. There are people who would donate toward faithful Amiga hardware development as well. I saw it with the Natami project where people tried to financially support Thomas but he did not accept donations or investments. Developers were allowed to help where they could and there were more developers, both ex and active, on the Natami forum than I have seen since Commodore days. This is different than trying to introduce something new like the Amiga when it was introduced. It's not like trying to introduce a new or do over RISC ISA which is more difficult. Even RISC-V has had slow adoption and development which I believe the 68k could easily catch up to.

Karlos Quote:

I love 68K but the fact is that the fastest 68K accelerator I can buy in 2024 is a virtual 68040 on ARM. If the OS and software (not to mention the Amiga hardware) doesn't care, why should I?

|

Hardcore fans may not care about value as much as fair weather fans. The fair weather fans are the masses that can increase the user base and encourage development though. You probably don't know what you are missing too.

Last edited by matthey on 12-Nov-2024 at 09:33 PM.


Karlos

Re: what is wrong with 68k

Posted on 12-Nov-2024 22:06:10

[ #65 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4716

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

@matthey

Quote:

| Hardcore fans may not care about value as much as fair weather fans. The fair weather fans are the masses that can increase the user base and encourage development though. You probably don't know what you are missing too. |

I have 68000, 68020 and 68040 machines and my only regret is I never got a 68060 because now they are ludicrously expensive and difficult to obtain.

matthey

Re: what is wrong with 68k

Posted on 12-Nov-2024 22:37:22

[ #66 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2395

From: Kansas

Karlos Quote:

I have 68000, 68020 and 68040 machines and my only regret is I never got a 68060 because now they are ludicrously expensive and difficult to obtain.

|

When the 68060 first came out, it was not very popular for the Amiga because it was less compatible than a 68040, mostly because of missing instructions. Support eventually improved and they became better than a 68040. Prices became really cheap with them sometimes going for under $50 USD compared to maybe ten times that for a rev 6 68060 today. We have several new 68060 accelerators and more are on the way. PiStorms and V4 hardware haven't even been able to kill the 68060 market. The old dying Amiga hardware hasn't been able to either. It's crazy. The LLVM 68k developers were impressed with 68k support, especially from the Amiga community.

[llvm-dev] [RFC] Backend for Motorola 6800 series CPU (M68k)

https://groups.google.com/g/llvm-dev/c/GyEXbuKpuVE John Paul Adrian Glaubitz Quote:

Adding to this: Despite its age, the Motorola 68000 is still a very popular architecture due to the fact that the CPU was used by a wide range of hardware from the 80s throughout the 2000s. It is used in the Amiga, Atari, Classic Macintosh, Sega MegaDrive, Atari Jaguar, SHARP X68000, various Unix workstations (Sun 2 and 3, Sony NeWS, NeXT, HP300), many arcade systems (Capcom CPS and CPS-2) and more.

As many of these classic systems still have very active communities, especially the Amiga community, development efforts are still very strong. For example, despite being the oldest port of the Linux kernel, the m68k port has still multiple active kernel maintainers and is regularly gaining new features and drivers. There are companies still developing new hardware around the CPU (like Individual Computers, for example) like network cards, graphics adapters or even completely new systems like the Vampire.

...

Yes, I fully agree. To underline how big the community is, let me just share a short anecdote. Previously, the GCC m68k backend had to be converted from the CC0 register representation to MODE_CC. We collected donations within the Amiga and Atari communities and within just a few weeks, we collected over $6000 which led to an experienced GCC engineer with m68k background to finish that very extensive work in just a few weeks.

So, I think in case there was a problem with the backend in LLVM, the community would have enough momentum to work towards solving this issue.

|

There is 68k support which can be ignored until it disappears or the momentum can help provide support for a hardware project. So far, the Amiga story has been the Neverland of missed opportunities but maybe there will be another chance with Ben the road bump out of the picture. One or two more road blocks turned into road bumps may do it.

Last edited by matthey on 12-Nov-2024 at 10:40 PM.

OneTimer1

Re: what is wrong with 68k

Posted on 12-Nov-2024 23:32:45

[ #67 ]

Super Member

Joined: 3-Aug-2015

Posts: 1113

From: Germany

Quote:

matthey wrote:

When the 68060 first came out, it was not very popular for the Amiga because

|

it was not available in production numbers. Phase5 had their accelerator ready but couldn't produce it.

Later it still wasn't very popular because all the companies producing accelerator cards targeted the less popular Amiga models like the A4000 or A3000.

The most popular Amiga was the A500, maybe they should have supported this first.

Hans

Re: what is wrong with 68k

Posted on 13-Nov-2024 1:38:25

[ #68 ]

Elite Member

Joined: 27-Dec-2003

Posts: 5109

From: New Zealand

@matthey

Quote:

| Are you talking about the 68k ISA/ABI, CPU core designs or both? |

You're way overthinking this.

If Motorola/Freescale had been able to put the same amount of effort into the 68K series as has gone into x86, then we'd have 64-bit 68K variants by now with similar performance to the latest x64 CPUs. And if that had happened, then we wouldn't be talking about AmigaOS on PowerPC because we'd never have wanted to migrate away from the 68K ecosystem (and MacOS wouldn't have become PowerPC either).

Hans

Hammer

Re: what is wrong with 68k

Posted on 13-Nov-2024 4:04:07

[ #69 ]

Elite Member

Joined: 9-Mar-2003

Posts: 6058

From: Australia

@matthey

Quote:

@matthey

PPC silicon is showing its age and it is in MUCH better shape than 68k silicon. Gains below about 28nm are diminishing.

|

The cache is getting larger as the gap between CPU and external memory grows.

X3D is important for industry-leading gaming CPUs and very important for BVH geometry setup processing.

https://www.techpowerup.com/review/amd-ryzen-7-9800x3d/17.html

Ryzen 7800X3D 8 cores/16 threads is 52 percent average faster than 10 cores/20 threads Core i9-11900K (Rocket Lake microarchitecture)

A GPU only renders the game's viewport while the CPU computes the game world's state.

A powerful GPU doesn't reduce the need for a powerful CPU.

_________________

Amiga 1200 (rev 1D1, KS 3.2, PiStorm32/RPi CM4/Emu68)

Amiga 500 (rev 6A, ECS, KS 3.2, PiStorm/RPi 4B/Emu68)

Ryzen 9 7950X, DDR5-6000 64 GB RAM, GeForce RTX 4080 16 GB

matthey

Re: what is wrong with 68k

Posted on 13-Nov-2024 6:05:02

[ #70 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2395

From: Kansas

OneTimer1 Quote:

it was not available in production numbers, Phase5 had their Accelerator ready but couldn't produce it.

|

The 68060 priority was lowered to the basement level shortly before production.

Motorola Introduces Heir to 68000 Line

https://websrv.cecs.uci.edu/~papers/mpr/MPR/ARTICLES/080502.pdf Quote:

The '060 was designed from the beginning as a modular processor. It can be stripped down or enhanced in various ways to meet price and performance needs. Versions proposed within Motorola, but not guaranteed to be put into production, include:

o 68060Lite - single integer pipeline, no FPU, cache sizes reduced, similar to 68LC040 but less costly.

o 68060S - instruction set reduced but still compatible with 68000, smaller caches, may integrate customer specific logic.

o 68060+ - undisclosed architectural enhancements that increase performance 20-30% independent of clock frequency.

Process improvements will bring further speed gains and, perhaps more important, cost reduction.

...

Price & Availability

The 50-MHz 68060, 68LC060, and 68EC060 are now sampling to selected beta sites. General samples will be available 3Q94, with production to follow late in that quarter. Prices are $263, $169, and $150, respectively, in 10,000-unit quantities. Production quantities of the 66-MHz 68060 will be available late in 4Q94, according to the company; no price has been announced. For further information, contact Motorola at 512.891.2917.

|

This is a Vol. 8, No. 5, April 18, 1994 Microprocessor Report with the 68060 plans from before the political changes going into production. The 8-stage 68060 had better integer performance efficiency (performance/MHz) and could be clocked up more than the 4-stage PPC603. The PPC603 was only on the market for months before the PPC603e was rushed to market with double the caches and a die shrink to improve performance and clock speed, but this made the PPC603e use more transistors than the 68060 and a more expensive fab process. Shallow pipeline PPC CPUs were going to get very expensive if they needed a die shrink every time the 68060 clock rate was increased to stay ahead in performance. A deeper pipeline allows a cheaper fab process to be used to achieve a similar clock speed.
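The pipeline depth argument can be illustrated with the usual first-order timing model, where the cycle time is the logic delay divided across the stages plus a fixed per-stage latch overhead. This is a textbook simplification, and the delay figures below are made-up round numbers chosen only to show the shape of the trade-off, not measurements of the 68060, the PPC603 or any real process.

```python
# First-order pipeline timing model: each stage gets an equal share of the
# total logic delay plus a fixed latch/clock-skew overhead per stage.
# All delay numbers are hypothetical, not 68060 or PPC603 data.

def max_clock_mhz(logic_delay_ns: float, stages: int, latch_overhead_ns: float) -> float:
    """Highest clock for a pipeline that splits logic_delay_ns evenly over `stages`."""
    cycle_ns = logic_delay_ns / stages + latch_overhead_ns
    return 1000.0 / cycle_ns

# Hypothetical: an older, cheaper process vs. a shrunk process with ~30% faster gates.
old_process = {"logic_delay_ns": 40.0, "latch_overhead_ns": 1.0}
new_process = {"logic_delay_ns": 28.0, "latch_overhead_ns": 0.7}

deep_on_old = max_clock_mhz(stages=8, **old_process)
shallow_on_new = max_clock_mhz(stages=4, **new_process)

print(f"8 stages, older process: {deep_on_old:.1f} MHz")
print(f"4 stages, newer process: {shallow_on_new:.1f} MHz")
```

With these assumed numbers the 8-stage design on the older process reaches about 166.7 MHz, against about 129.9 MHz for the 4-stage design on the faster process. The model leaves out the extra cycles a deeper pipeline loses on mispredicted branches and the fact that real stages are never perfectly balanced, so it only shows why depth and process can substitute for each other, not by how much.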

Despite all the 68060 plans in the Microprocessor Report, only the LC and EC parts for the embedded market had any priority, and they were the only development of the 68060 despite the modular design and extra effort to streamline development and testing.

https://www.semanticscholar.org/paper/The-superscalar-architecture-of-the-MC68060-Circello-Edgington/673ed0eb6984ccfd1ec3b2fb39f144d58b3958f9 Quote:

We designed the chip to be power-thrifty and production testable, as well as modular and scalable. A static design based on edge-triggered fully scannable flip-flops, it employs design implementation strategies to reduce dynamic switching. The MC68060 allows a selective enable and disable of functionality to aid engineering test and analysis and to enhance the yield of derivative parts. Its scalable design can move to higher frequencies to keep pace with process improvements without requiring major architectural modifications.

The initial implementation of the microprocessor is a 2.5 million transistor design that runs at 50 or 66 MHz. It typically dissipates approximately 4.5W of power at 66MHz.

|

With all the talk of a 68060@66MHz, I've never seen a full one with MMU and FPU. Clocking up the 68060 improves the overall performance and price efficiency (performance/$) where the 68060 was already beating the PPC603 in performance efficiency (performance/MHz). It is a disgrace and a waste to deliberately suppress a very good design for political reasons.

OneTimer1 Quote:

Later it still wasn't very popular because all the companies producing accelerator cards targeted the less popular Amiga models like the A4000 or A3000.

The most popular Amiga was the A500, maybe they should have supported this first.

|

Customers who bought the Amiga 500 chose a low price over expandability and upgradeability. It is partly Commodore's fault for not having a high end chipset to improve the value of high end Amigas.

Hans Quote:

You're way overthinking this.

If Motorola/Freescale had been able to put the same amount of effort into the 68K series as has gone into x86, then we'd have 64-bit 68K variants by now with similar performance to the latest x64 CPUs. And if that had happened, then we wouldn't be talking about AmigaOS on PowerPC because we'd never have wanted to migrate away from the 68K ecosystem (and MacOS wouldn't have become PowerPC either).

|

Motorola/Freescale could not put the same effort into the 68k (or PPC) as Intel put into x86. In some years more 68k CPUs were sold than x86 CPUs, but they were mostly low margin embedded CPUs, whereas x86 desktop CPUs were much higher margin. If Commodore had become a major desktop player with the 68k Amiga, then maybe Motorola/Freescale would have had a chance, but Commodore decided to practically ignore the high end desktop market by not developing a high end chipset (Ranger) and by using low end embedded 68k CPUs. Motorola/Freescale played their hand poorly too. They could not conquer the desktop with either the 68k or PPC after the x86 monster was at full power, but they could have retained the embedded market by not betting the farm on PPC and keeping the 68k alive with ARM-like upgrades, product variations and support. The 68060 is a good example of a high performance in-order CPU that could have remained competitive with incremental upgrades. The 68k is more efficient than x86 due to a better and more orthogonal ISA with better code density. It has a performance advantage over most weak embedded ISAs including ARM, Thumb(-2), SuperH, MIPS, etc., up until ARM64/AArch64 and PPC, where it remains competitive in performance and has better code density. The 68k created the 32-bit embedded market and the 32-bit workstation market, which shows unprecedented scalability from low end to high end. Motorola/Freescale threw the baby out with the bath water and became ignorant PPC zealots. The AIM Alliance wasn't good for them or Apple, but maybe it was for IBM.

kolla

Re: what is wrong with 68k

Posted on 13-Nov-2024 6:16:17

[ #71 ]

Elite Member

Joined: 20-Aug-2003

Posts: 3276

From: Trondheim, Norway

@matthey

Quote:

| Some Amiga users once thought recreating an Amiga in a FPGA was a hoax too. |

Do you remember how they were proven wrong?

_________________

B5D6A1D019D5D45BCC56F4782AC220D8B3E2A6CC

Hans

Re: what is wrong with 68k

Posted on 13-Nov-2024 7:04:29

[ #72 ]

Elite Member

Joined: 27-Dec-2003

Posts: 5109

From: New Zealand

@matthey

I think that Motorola could have kept the 68K competitive if it had been their main focus. Instead they got bitten by the "RISC is better bug," resulting in the 88000 series, which they then dumped in favour of the PowerPC when Apple convinced them to.

From what I heard, Motorola bet almost the entire company on satellite phone communications, and funded Iridium which cost them ~$6 billion. They incorporated Iridium Inc. back in 1991, so this was happening round the same time as PowerPC. Unfortunately for them, cell-phone coverage grew exponentially, wiping out most of the market for a satellite phone by the time that the Iridium service was available in 1998. It was a very expensive flop.

$6 billion could have paid for a lot of 68K development, which would probably have given a better return on investment...

As if that wasn't enough, Motorola were also involved in the mobile phone market, and probably other stuff too.

Hans

BigD

Re: what is wrong with 68k

Posted on 13-Nov-2024 8:32:45

[ #73 ]

Elite Member

Joined: 11-Aug-2005

Posts: 7466

From: UK

@Hans

This timeline is pretty good, whereby ARM catches up and PiStorms and FPGA 68080 cores continue Motorola's legacy!

_________________

"Art challenges technology. Technology inspires the art."

John Lasseter, Co-Founder of Pixar Animation Studios

kolla

Re: what is wrong with 68k

Posted on 13-Nov-2024 8:40:03

[ #74 ]

Elite Member

Joined: 20-Aug-2003

Posts: 3276

From: Trondheim, Norway

@BigD

And with "PiStorm" you mean Raspberry Pi with Emu68…

Karlos

Re: what is wrong with 68k

Posted on 13-Nov-2024 10:49:12

[ #75 ]

Elite Member

Joined: 24-Aug-2003

Posts: 4716

From: As-sassin-aaate! As-sassin-aaate! Ooh! We forgot the ammunition!

BigD

Re: what is wrong with 68k

Posted on 13-Nov-2024 11:15:45

[ #76 ]

Elite Member

Joined: 11-Aug-2005

Posts: 7466

From: UK

@kolla

Yep, and I seem to have summarised the titanic effort of Michal down to just the achievements of the CPU itself and the machine god! That is in the spirit of these threads, as the PPC-disciple has shown us the way!

OlafS25

Re: what is wrong with 68k

Posted on 13-Nov-2024 11:16:29

[ #77 ]

Elite Member

Joined: 12-May-2010

Posts: 6461

From: Unknown

@BigD

I do not see a realistic niche market for another processor. ARM now covers everything that needs low prices at acceptable performance. When you need power you use AMD or Intel processors, or POWER9 if you use hardware from IBM. There is also the embedded market, where PPC was also used, but I strongly assume that it will be replaced by ARM. If I remember right, the company that acquired the company behind ColdFire also changed to ARM. Even if somebody made a better processor, it would be difficult to establish it in the already ARM-dominated market. 68k is now a nice technology for us, be it as real hardware, emulation or other implementations. But it is retro now.

@Matthey

you invest lots of time in your posts and always talk about investors. But creating a new platform and even a new processor is both risky and expensive. Nobody bets his retirement on something like this. It is typical for venture investors who invest in several projects and when only one succeeds it is profitable. But that you do only with money you do not necessarily need.

But if you want, start. Make a business plan and try to find investors.

OlafS25

Re: what is wrong with 68k

Posted on 13-Nov-2024 11:37:30

[ #78 ]

Elite Member

Joined: 12-May-2010

Posts: 6461

From: Unknown

@Hans

Problem was, I think, that Apple was the biggest customer. Commodore would have been too small. And at that time, dropping 68k in favor of PPC seemed to be the most secure plan because also IBM involved so less investment needed. And opinion was generally that RISC processors would win the competition and classical CISC processors like the INTEL processors would loose. Seen from today wrong decision but decisions are always done with assumptions of future. In this case wrong assumptions. |

| | Status: Offline |

| |  OneTimer1 OneTimer1

|  |

Re: what is wrong with 68k

Posted on 13-Nov-2024 12:55:11

[ #79 ]

Super Member

Joined: 3-Aug-2015

Posts: 1113

From: Germany

Quote:

Hans wrote:

I think that Motorola could have kept the 68K competitive if it had been their main focus. Instead they got bitten by the "RISC is better bug," ...

|

The worst bug for us was the ColdFire bug: selling the incompatible ColdFire as a 68k successor was a mess. It was the same stupid behavior that led them to build a PPC/Power chip with an incompatible FPU.

Quote:

Hans wrote:

resulting in the 88000 series, which they then dumped in favour of the PowerPC when Apple convinced them to.

|

Most of their big customers from the workstation market started to build their own RISC chips because Motorola was unable to meet their requirements; just think of companies like Sun and Hewlett-Packard.

Last edited by OneTimer1 on 13-Nov-2024 at 01:05 PM.

Last edited by OneTimer1 on 13-Nov-2024 at 12:55 PM.

matthey

Re: what is wrong with 68k

Posted on 13-Nov-2024 19:24:38

[ #80 ]

Elite Member

Joined: 14-Mar-2007

Posts: 2395

From: Kansas

Hammer Quote:

The cache is getting larger as the gap between CPU and external memory grows.

|

Yes. That has been a Moore's Law effect but Moore's Law is coming to an end.

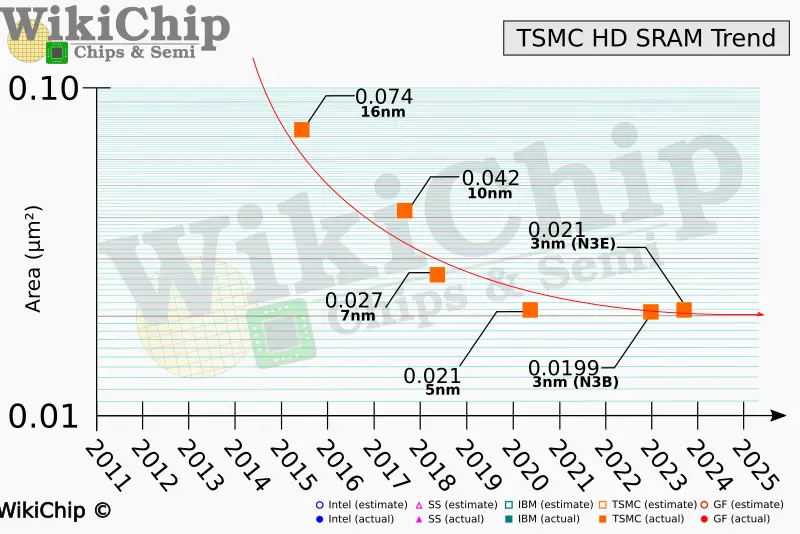

SRAM is no longer scaling like it used to.

https://fuse.wikichip.org/news/7343/iedm-2022-did-we-just-witness-the-death-of-sram/

TSMC's N2 production node reportedly shrinks the HD SRAM bit cell size to around 0.0175 µm², so SRAM is still scaling for now, but the trend is ominous, with major implications for CPU and GPU development and chip fabrication. Modern CPU performance has largely come from SRAM caches and OoO buffers/queues.
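As a rough worked example of what that bit cell size means for area (the 32 MiB cache size is an arbitrary figure chosen for illustration, and the result counts only the bit cells, ignoring sense amplifiers, decoders and other array overhead, so a real SRAM macro would be noticeably larger):

$$ 32\,\text{MiB} = 32 \times 2^{20} \times 8 = 268{,}435{,}456\ \text{bits} $$

$$ 268{,}435{,}456 \times 0.0175\,\mu\text{m}^2 \approx 4.70 \times 10^{6}\,\mu\text{m}^2 \approx 4.70\,\text{mm}^2 $$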

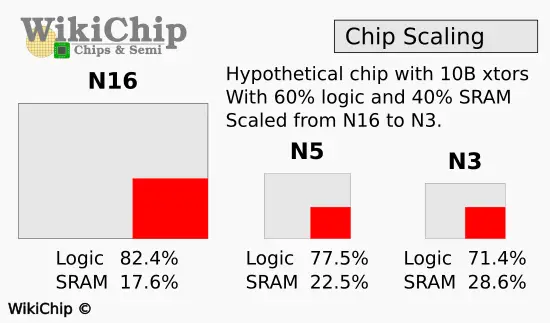

Improvements in CPU OoO performance can be expected to slow down and become more expensive, and code density becomes more important. I believe this has negative implications for the ARM64/AArch64 strategy of quickly catching up with x86-64 using expensive OoO designs on smaller nodes, which I'm not sure the embedded market can support and sustain. ARM has been raising its licensing and royalty "taxes" to pay for its expensive war on the x86-64 desktop, which could provoke an embedded rebellion. I do not believe ARM64/AArch64 can dethrone x86-64 on the desktop because of the lack of software and software compatibility.

As SRAM scaling slows, CISC cores gain a small but growing advantage. The extra logic of a CISC core compared to a RISC core is getting cheaper relative to SRAM, and the logic used to improve code density has a synergy with cache savings, because denser code needs less SRAM. ARM64/AArch64 uses a simpler fixed-length 32-bit encoding, which reduces decoding logic rather than improving code density to shrink SRAM caches. Of course, x86-64 has only mediocre code density, which wastes SRAM, and it may also spend SRAM on pre-decode bits in its caches and on micro-op/loop caches. A CISC ISA with better code density and lower decoding overhead would gain more of an advantage, perhaps enough to let a high-performance, efficient in-order core with beefed-up logic compete with lower-end OoO cores that are maybe 10 times the size, much of that area going to SRAM OoO buffers/queues. Moore's Law is over, and good, efficient core designs should become more competitive against quickly developed designs, propaganda and politics.
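A simple way to see the code density and cache synergy described above: if code in one encoding is a fraction $r$ of the size of the same program in a reference encoding, then an instruction cache of capacity $C$ holds as much of that program as a cache of $C/r$ would with the reference encoding. This treats the cache as simply holding a fixed amount of code and ignores line granularity, associativity and miss-rate effects; the 20% reduction below is a hypothetical figure, not a measurement of any particular ISA:

$$ C_{\text{eff}} = \frac{C}{r}, \qquad r = 0.8 \;\Rightarrow\; C_{\text{eff}} = 1.25\,C $$

Under that assumption, a 32 KiB instruction cache would hold roughly as much code as a 40 KiB cache filled with the less dense encoding, or the same amount of code could be cached with less SRAM.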

Hans Quote:

I think that Motorola could have kept the 68K competitive if it had been their main focus. Instead they got bitten by the "RISC is better bug," resulting in the 88000 series, which they then dumped in favour of the PowerPC when Apple convinced them to.

|

Pretty much every major CPU developer experimented with RISC architectures, and for good reason. Overall, RISC philosophies provided better performance gains than CISC philosophies, some of which were outdated and illogical. Some RISC philosophies were flawed and their performance didn't scale, but simpler CPUs that were quicker to develop were an advantage under Moore's Law.

The 88k was fine as an R&D project, and when it was commercialized Motorola allowed it to stand on its own, unlike PPC, which was pushed at the expense of everything else without technical evaluation. The RISC market was too fragmented, which hurt the 88k's chances, but there were other mistakes. Architect Mitch Alsup said the greatest 88k mistake was not making the architecture 64-bit from the beginning. Like many other higher-end RISC CPUs, the 88k was modular and required multiple chips, so there wasn't much disadvantage to going 64-bit, which would have been an advantage in higher-end markets like workstations and for the SIMD functionality (68k CPUs were feature-rich single-chip MPUs, with all the advantages and disadvantages this brought). A multi-chip 64-bit 88k workstation replacement for the single-chip 32-bit 68k, which was losing competitiveness due to 68040 development and production issues, would have covered the market better with less competition between the 68k and 88k. Interestingly, the 88k was replaced by PPC, which was also one of the slower RISC architectures to move to 64-bit. Intel experimented with RISC, VLIW and other architectures and tried to kill off 808x/x86 at least twice, with the iAPX 432 and, much later, Itanium. Both were very expensive mistakes, but Intel went back to its ugly baby, unlike Motorola, which abandoned its beautiful baby and never looked back.

Hans Quote:

From what I heard, Motorola bet almost the entire company on satellite phone communications, and funded Iridium which cost them ~$6 billion. They incorporated Iridium Inc. back in 1991, so this was happening round the same time as PowerPC. Unfortunately for them, cell-phone coverage grew exponentially, wiping out most of the market for a satellite phone by the time that the Iridium service was available in 1998. It was a very expensive flop.

$6 billion could have paid for a lot of 68K development, which would probably have given a better return on investment...

|

Billion-dollar losses on one project are devastating, all right. Even if the Iridium loss had been avoided and the money had gone to the chip division as extra capital, I'm afraid it would have resulted in more PPC development and die shrinks to try to make the shallow-pipeline PPC cores more competitive. Motorola had leadership/management problems.

Hans Quote:

As if that wasn't enough, Motorola were also involved in the mobile phone market, and probably other stuff too.

|

Motorola started with car radios and created the first walkie-talkies and cell phones. They transitioned from analog to digital, including their chip division producing the 6800 and 68k architectures (they could have had the 6502 too). They were early to the game, vertically integrated and well positioned for the digital cellular market, much like Commodore was for the personal computer market. The difference between good and bad leadership/management is huge, which is why big business CEOs make millions of dollars a year, but you don't always get what you pay for.

OlafS25 Quote:

you invest lots of time in your posts and always talk about investors. But creating a new platform and even a new processor is both risky and expensive. Nobody bets his retirement on something like this. It is typical for venture investors who invest in several projects and when only one succeeds it is profitable. But that you do only with money you do not necessarily need.

But if you want, start. Make a business plan and try to find investors.

|

Creating new architectures is very difficult which is why I propose reviving existing and still supported architectures and cores. I'm suggesting a major project for micro-businesses but nothing like the Iridium satellite project or even the original project to bring the 68k Amiga to market. Major market players like ARM Holdings likely wouldn't even notice any more than they did for the original RPi project even though they are now a major investor in RPi.

OneTimer1 Quote:

The worst bug for us was the Coldfire bug, selling the incompatible Coldfire as an 68k successor was a mess. It was the same stupid behavior that let them build a PPC/Power Chip with an incompatible FPU.

|

The unnecessary 68k incompatibility was worse for ColdFire customers and Motorola/Freescale's embedded market share than it was for the 68k.

High-Performance Internal Product Portfolio Overview Issue 10 Fourth Quarter, 1995 Quote:

Target Markets/Applications:

The XCF5102 is fully ColdFire code compatible. As the first chip in the ColdFire Family, it has been designed with special capabilities that allow it to also execute the M68000 code that exists today. These extensions to the Coldfire instruction set allow Motorola customers to utilize the XCF5102 as a bridge to future ColdFire processors for applications requiring the advantages of a variable-length RISC architecture. Compatibility with existing development tools such as compilers, debuggers, real-time operating systems and adapted hardware tools offers XCF5102 developers access to a broad range of mature tool support; enabling an accelerated product development cycle, lower development costs and critical time-to-market advantages for Motorola customers.

|

The XCF5102 ColdFire chip was originally called the 68040VL. It used the 6-stage 68040 pipeline, improved with a decoupled instruction fetch pipeline and execution pipeline as introduced in the 68060 and found in later ColdFire cores. The VL in 68040VL stands for Variable Length RISC, the marketing name for the castrated 68k VLE. The XCF5102 "bridge to future ColdFire processors" turned into a burned bridge for 68k compatibility, even though ColdFire later added back a large chunk of what had been cut from the 68k. Full ColdFire compatibility on the 68k is impossible, but high compatibility with user mode programs is possible, and the later new ColdFire instructions mostly used encodings that were free on the 68k. The MVS and MVZ instructions improve 68k code generation and code density and are a compiler switch away, as 68k and ColdFire compiler backends are usually shared. The AC68080 brought them back after a stealth change to the AC68080 ISA. I had been a proponent of MVS and MVZ early in the development of the AC68k; Gunnar implemented them, then decided they weren't worth the encoding space and removed them, only to bring them back fairly recently. This required removing or relocating conflicting AC68080 instructions, but I didn't hear much complaining about incompatibility, so his conflicting instruction(s) likely weren't used much (I recall a register bank switching instruction to add more registers). One advantage of an ISA in an FPGA is that mistakes can be fixed.

Last edited by matthey on 13-Nov-2024 at 07:38 PM.
